AI Observability in Practice

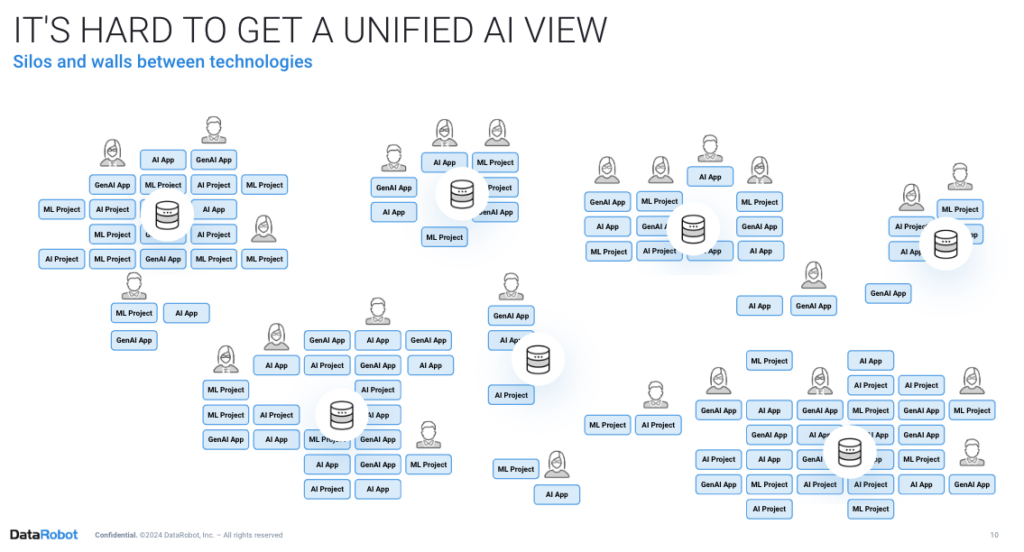

Many organizations start off with good intentions, building promising AI solutions, but these initial applications often end up disconnected and unobservable. For instance, a predictive maintenance system and a GenAI documentation chatbot might be built and run in different parts of the organization, leading to sprawl. AI Observability refers to the ability to monitor and understand the functionality of generative and predictive machine learning models throughout their life cycle within an ecosystem. This is crucial in areas like Machine Learning Operations (MLOps) and particularly in Large Language Model Operations (LLMOps).

AI Observability aligns with DevOps and IT operations, ensuring that generative and predictive AI models can integrate smoothly and perform well. It enables the tracking of metrics, performance issues, and outputs generated by AI models, providing a comprehensive view through an organization’s observability platform. It also sets teams up to build even better AI solutions over time by saving and labeling production data to retrain predictive models or fine-tune generative models. This continuous retraining process helps maintain and enhance the accuracy and effectiveness of AI models.

However, AI observability isn’t without challenges. Architectural, user, database, and model “sprawl” now overwhelm operations teams because of longer setup times and the need to wire multiple infrastructure and modeling pieces together, and even more effort goes into continuous maintenance and updates. Handling sprawl is impossible without an open, flexible platform that acts as your organization’s centralized command and control center to manage, monitor, and govern the entire AI landscape at scale.

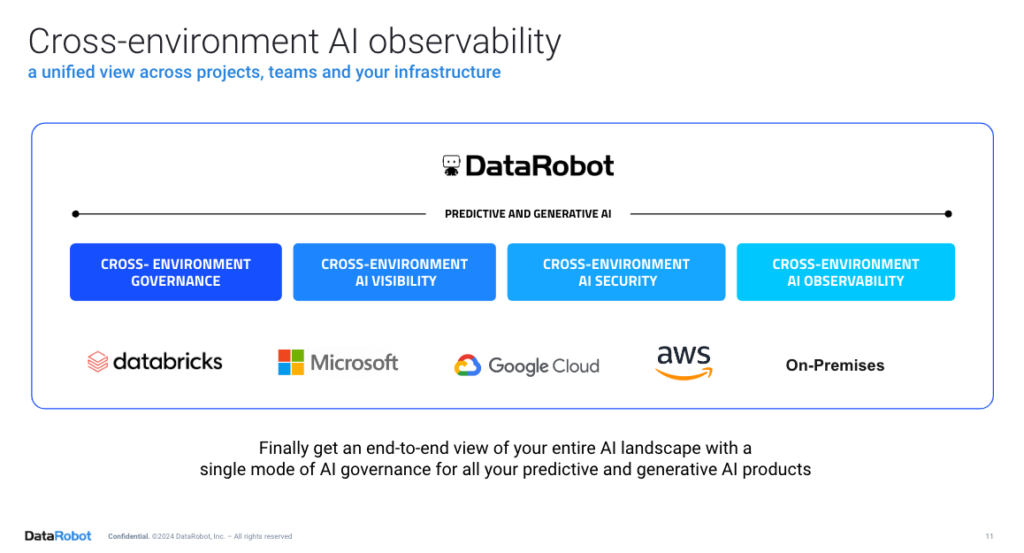

Most companies don’t rely on a single infrastructure stack, and they might change stacks in the future. What really matters to them is that AI production, governance, and monitoring stay consistent.

DataRobot is committed to cross-environment observability: cloud, hybrid, and on-prem. For AI workflows, this means you can choose where and how to develop and deploy your AI projects while maintaining complete insight into and control over them, even at the edge. It’s like having a 360-degree view of everything.

DataRobot offers 10 main out-of-the-box components to achieve a successful AI observability practice:

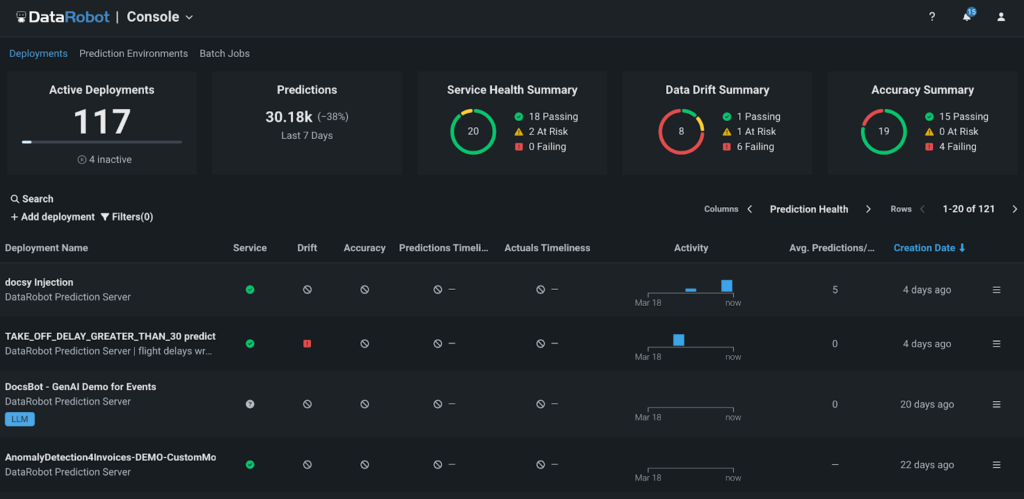

- Metrics Monitoring: Tracking performance metrics in real-time and troubleshooting issues.

- Model Management: Using tools to monitor and manage models throughout their lifecycle.

- Visualization: Providing dashboards for insights and analysis of model performance.

- Automation: Automating the building, governance, deployment, monitoring, and retraining stages of the AI lifecycle for smooth workflows.

- Data Quality and Explainability: Ensuring data quality and explaining model decisions.

- Advanced Algorithms: Employing out-of-the-box metrics and guards to enhance model capabilities.

- User Experience: Enhancing user experience with both GUI and API flows.

- AIOps and Integration: Integrating with AIOps and other solutions for unified management.

- APIs and Telemetry: Using APIs for seamless integration and collecting telemetry data.

- Practice and Workflows: Creating a supportive ecosystem around AI observability and taking action on what is being observed.

AI Observability In Action

Organizations across industries implement GenAI chatbots in various functions for distinct purposes, such as increasing efficiency, enhancing service quality, and accelerating response times.

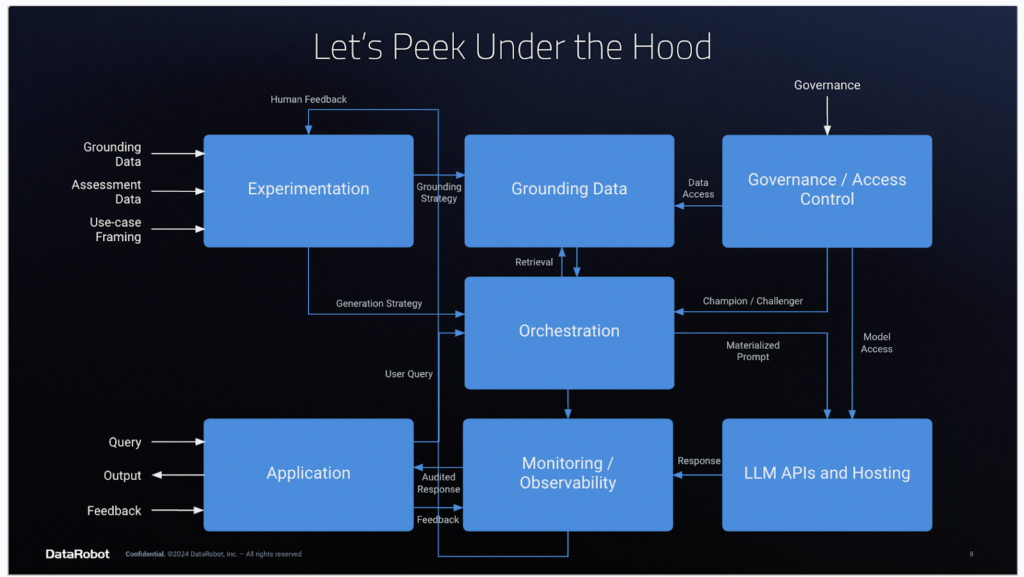

Let’s explore the deployment of a GenAI chatbot within an organization and discuss how to achieve AI observability using an AI platform like DataRobot.

Step 1: Collect relevant traces and metrics

DataRobot and its MLOps capabilities provide world-class scalability for model deployment. Models across the organization, regardless of where they were built, can be supervised and managed on a single platform. In addition to DataRobot models, open-source models deployed outside of DataRobot MLOps can also be managed and monitored by the DataRobot platform.

AI observability capabilities within the DataRobot AI platform help ensure that organizations know when something goes wrong, understand why it went wrong, and can intervene to optimize the performance of AI models continuously. By tracking service health, drift, prediction data, training data, and custom metrics, enterprises can keep their models and predictions relevant in a fast-changing world.
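Drift tracking usually comes down to comparing the distribution of a feature in recent prediction data against its training baseline. As a minimal sketch, the Population Stability Index (PSI) below is one widely used drift score. It is a generic illustration, not DataRobot’s internal implementation, and the function name, the equal-width binning, and the `eps` smoothing value are assumptions.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a training baseline (expected) and production data (actual).

    Bins are equal-width over the baseline's range; higher values mean more drift.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp production values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats PSI values above roughly 0.2 to 0.25 as a sign of meaningful drift, but thresholds should be tuned per feature and use case.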

Step 2: Analyze data

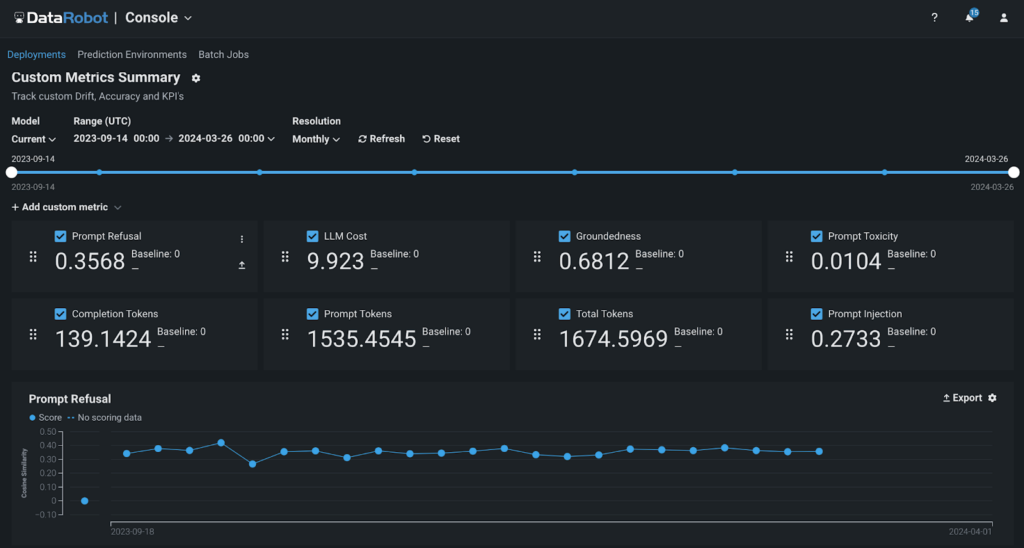

With DataRobot, you can use pre-built dashboards to monitor traditional data science metrics or define custom metrics that address specific aspects of your business.

These custom metrics can be developed either from scratch or from a DataRobot template, and you can use them for models built or hosted in DataRobot as well as outside of it.

The ‘Prompt Refusal’ metric represents the percentage of chatbot responses in which the LLM couldn’t address the user’s prompt. While this metric provides valuable insight, what the business truly needs are actionable steps to minimize it.
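A custom metric like this can be computed from scratch over logged chatbot responses. The sketch below uses one possible heuristic: it flags a response as a refusal when it contains one of a hand-picked set of phrases. The phrase list and function names are hypothetical, and a DataRobot template may define refusal differently (for example, with a classifier instead of string matching).

```python
from typing import Iterable

# Hypothetical phrases that signal the LLM declined or could not answer.
# Tune this list (or replace it with a classifier) for your own chatbot.
REFUSAL_MARKERS = (
    "i don't know",
    "i do not have information",
    "i'm unable to",
    "i cannot answer",
)


def is_refusal(response: str) -> bool:
    """Heuristically flag a chatbot response as a refusal."""
    # Normalize curly apostrophes so "I’m" matches "i'm".
    text = response.lower().replace("\u2019", "'")
    return any(marker in text for marker in REFUSAL_MARKERS)


def prompt_refusal_rate(responses: Iterable[str]) -> float:
    """Percentage (0-100) of responses in which the LLM could not address the prompt."""
    responses = list(responses)
    if not responses:
        return 0.0
    refused = sum(is_refusal(r) for r in responses)
    return 100.0 * refused / len(responses)
```

String matching is cheap and transparent, which makes it a reasonable first version; its blind spot is refusals phrased in ways the list does not anticipate.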

Guided questions: Answering these builds a more comprehensive understanding of the factors contributing to prompt refusals:

- Does the LLM have the appropriate structure and data to answer the questions?

- Is there a pattern in the types of questions, keywords, or themes that the LLM cannot address or struggles with?

- Are there feedback mechanisms in place to collect user input on the chatbot’s responses?
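For the third question, a feedback mechanism can be as simple as storing a thumbs-up or thumbs-down rating next to each question and answer. The sketch below assumes a hypothetical `FeedbackEvent` record and shows two summaries that also help with the second question: the share of answers users found unhelpful, and the most frequent terms in the questions they rated poorly. The stopword list is a small illustrative assumption.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Small illustrative stopword list; a real application would use a fuller one.
STOPWORDS = {"a", "an", "the", "is", "are", "do", "does", "how", "what",
             "i", "my", "to", "for", "of", "in", "on", "can", "you"}


@dataclass(frozen=True)
class FeedbackEvent:
    """One user rating captured by the chat UI (hypothetical schema)."""
    question: str
    answer: str
    helpful: bool  # thumbs up = True, thumbs down = False


def negative_feedback_rate(events: list[FeedbackEvent]) -> float:
    """Share (0.0-1.0) of rated answers that users marked as unhelpful."""
    if not events:
        return 0.0
    return sum(not e.helpful for e in events) / len(events)


def top_terms_in_unhelpful(events: list[FeedbackEvent], n: int = 3) -> list[tuple[str, int]]:
    """Most frequent non-stopword terms in questions that received a thumbs down."""
    counts: Counter = Counter()
    for e in events:
        if not e.helpful:
            words = re.findall(r"[a-z0-9]+", e.question.lower())
            counts.update(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)
```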

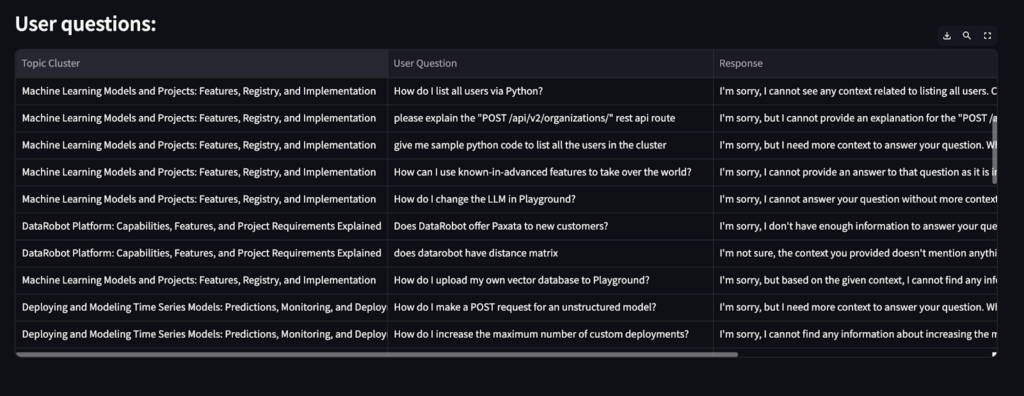

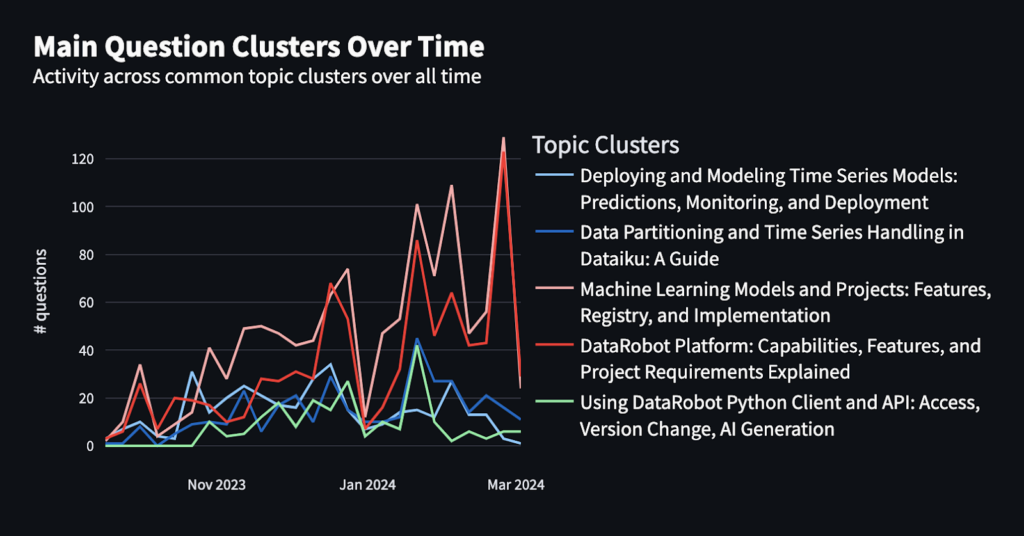

User-feedback loop: We can answer these questions by implementing a user-feedback loop and building an application to find the “hidden information”.

The screenshots in this step show an example Streamlit application that provides insights into a sample of user questions and topic clusters for the questions the LLM couldn’t answer.
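Topic clusters like these are typically built from text embeddings and a clustering algorithm. To keep the idea visible without extra dependencies, the sketch below swaps in a much simpler technique: greedy grouping of unanswered questions by the Jaccard similarity of their word sets. The function names and the 0.3 similarity threshold are assumptions, not part of the application shown.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set of a question."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def group_questions(questions: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy single-pass grouping of unanswered questions.

    Each question joins the first group whose seed (first) question is similar
    enough; otherwise it starts a new group.
    """
    groups: list[tuple[set[str], list[str]]] = []
    for q in questions:
        toks = tokens(q)
        for seed, members in groups:
            if jaccard(toks, seed) >= threshold:
                members.append(q)
                break
        else:
            groups.append((toks, [q]))
    # Largest groups first: they point to the biggest gaps in the knowledge base.
    return sorted((members for _, members in groups), key=len, reverse=True)
```

The largest groups are natural candidates for the actions in Step 3, such as adding missing content to the knowledge base.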

Step 3: Take actions based on analysis

Now that you have a grasp of the data, you can take the following steps to enhance your chatbot’s performance significantly:

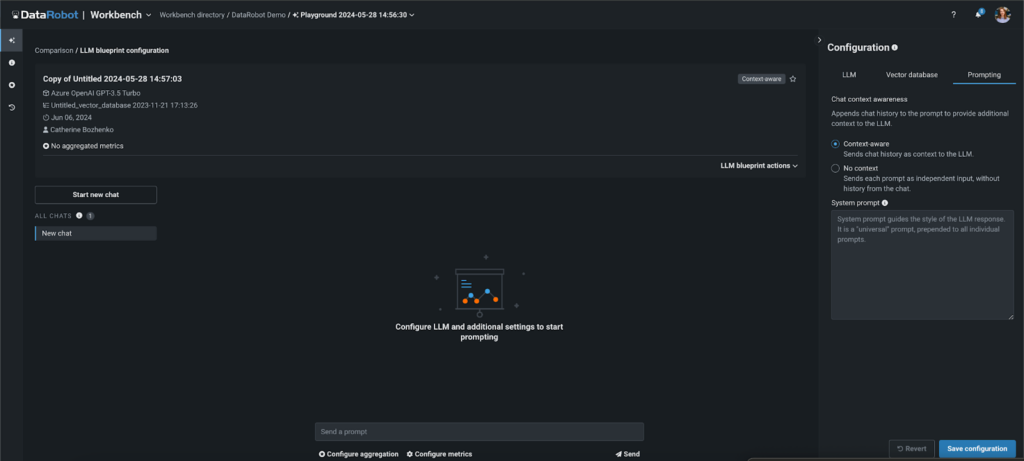

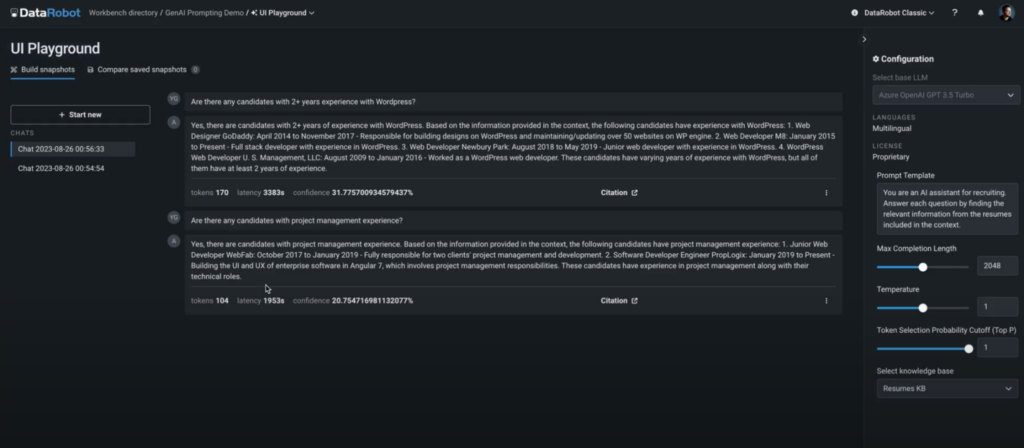

- Modify the Prompt: Try different system prompts to get better, more accurate results.

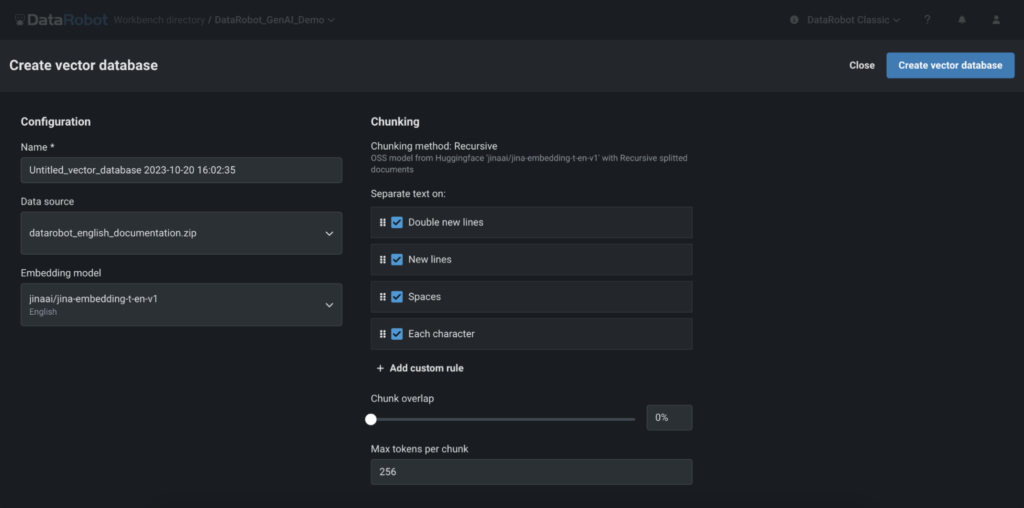

- Improve Your Vector Database: Identify the questions the LLM didn’t have answers to, add this information to your knowledge base, and then re-index it in the vector database so the LLM can retrieve it when answering.

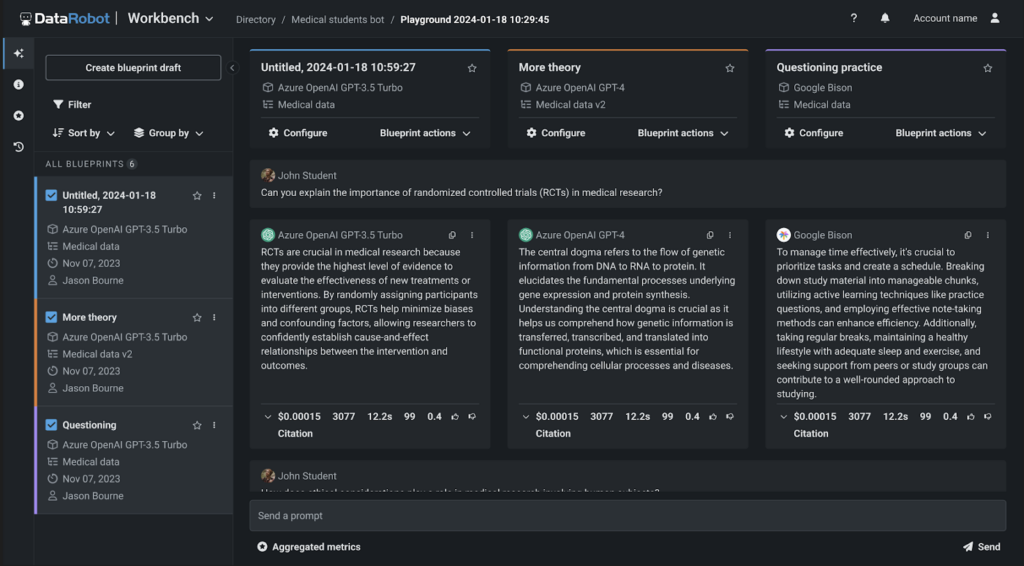

- Fine-tune or Replace Your LLM: Experiment with different configurations to fine-tune your existing LLM for optimal performance.

Alternatively, evaluate other LLM strategies and compare their performance to determine if a replacement is needed.

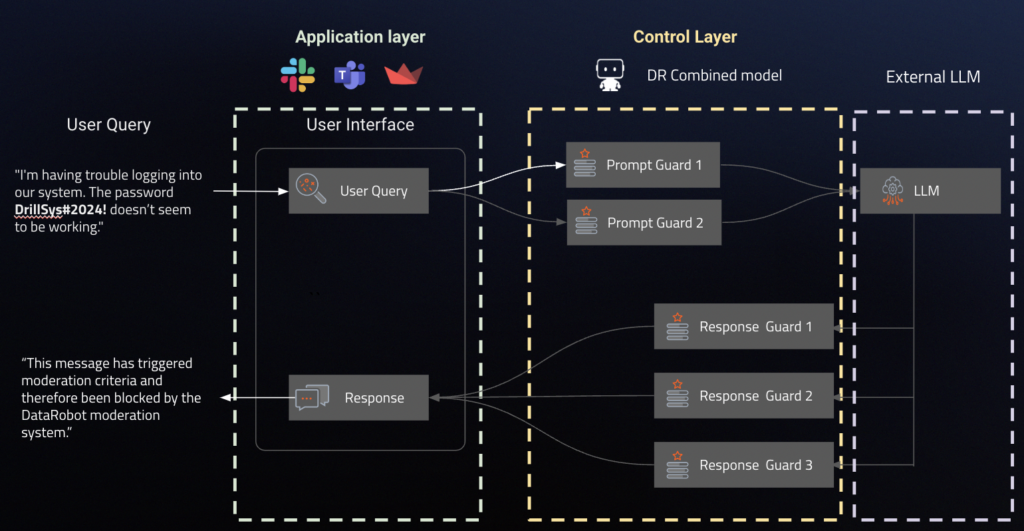

- Moderate in Real-Time or Set the Right Guard Models: Pair each generative model with a predictive AI guard model that evaluates the quality of the output and filters out inappropriate or irrelevant questions.

This framework has broad applicability across use cases where accuracy and truthfulness are paramount. DataRobot provides a control layer that allows you to take the data from external applications, guard it with predictive models hosted in or outside DataRobot or with NVIDIA NeMo Guardrails, and call an external LLM to generate responses.
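The control-layer pattern described above can be sketched in a framework-agnostic way: a guard model scores the incoming prompt, the LLM is called only if the prompt passes, and a second guard scores the answer before it is returned. This is not the DataRobot or NeMo Guardrails API; the `GuardedLLM` class, the scorer callables, the thresholds, and the fallback message are hypothetical placeholders for whatever models you actually host.

```python
from dataclasses import dataclass
from typing import Callable

# A guard model is any function that maps text to a score in [0, 1].
Scorer = Callable[[str], float]


@dataclass
class GuardedLLM:
    llm: Callable[[str], str]   # the external LLM call (hypothetical)
    input_risk: Scorer          # e.g. toxicity / off-topic classifier; higher = riskier
    output_quality: Scorer      # e.g. relevance / faithfulness classifier; higher = better
    input_threshold: float = 0.5
    output_threshold: float = 0.5
    fallback: str = "Sorry, I can't help with that request."

    def ask(self, prompt: str) -> str:
        # 1. Block inappropriate or irrelevant prompts before they reach the LLM.
        if self.input_risk(prompt) >= self.input_threshold:
            return self.fallback
        answer = self.llm(prompt)
        # 2. Replace answers the output guard scores as low quality.
        if self.output_quality(answer) < self.output_threshold:
            return self.fallback
        return answer
```

Keeping the guards as plain callables means the same wrapper works whether a guard is a predictive model hosted in DataRobot, one hosted elsewhere, or a rules-based check.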

By following these steps, you can maintain a 360° view of all your AI assets in production and ensure that your chatbots remain effective and reliable.
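The first action in Step 3, trying different system prompts, is easiest to judge when every candidate prompt is run against the same set of logged questions and scored with the same metric. The sketch below compares candidates by refusal rate. `generate` stands in for your real LLM call, and `fake_generate` is a toy stub for illustration only; both, along with the function names, are assumptions.

```python
from typing import Callable


def compare_system_prompts(
    system_prompts: dict[str, str],
    questions: list[str],
    generate: Callable[[str, str], str],
    is_refusal: Callable[[str], bool],
) -> dict[str, float]:
    """Return the refusal rate (0.0-1.0) of each candidate system prompt."""
    if not questions:
        raise ValueError("need at least one question to compare prompts")
    rates: dict[str, float] = {}
    for name, system_prompt in system_prompts.items():
        answers = [generate(system_prompt, q) for q in questions]
        rates[name] = sum(is_refusal(a) for a in answers) / len(answers)
    return rates


def fake_generate(system_prompt: str, question: str) -> str:
    """Toy stand-in for an LLM call: it only 'knows' billing if the prompt says so."""
    if "billing" in question.lower() and "billing" not in system_prompt.lower():
        return "I don't know."
    return "Here is the answer."


if __name__ == "__main__":
    candidates = {
        "baseline": "You are a support assistant.",
        "detailed": "You are a support assistant. You can answer billing questions.",
    }
    logged_questions = ["Why was my billing charged twice?", "How do I reset my password?"]
    rates = compare_system_prompts(
        candidates, logged_questions, fake_generate,
        is_refusal=lambda a: "don't know" in a.lower(),
    )
    print(rates, "best:", min(rates, key=rates.get))
```

Refusal rate is only one axis; in practice you would also check answer quality so that a prompt does not "win" by answering confidently but incorrectly.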

Summary

AI observability is essential for ensuring the effective and reliable performance of AI models across an organization’s ecosystem. By leveraging the DataRobot platform, businesses can maintain comprehensive oversight and control of their AI workflows, ensuring consistency and scalability.

Implementing robust observability practices not only helps in identifying and preventing issues in real time but also aids in continuous optimization and enhancement of AI models, ultimately creating useful and safe applications.

By utilizing the right tools and strategies, organizations can navigate the complexities of AI operations and harness the full potential of their AI infrastructure investments.

About the authors

Atalia Horenshtien is a Global Technical Product Advocacy Lead at DataRobot. She plays a vital role as the lead developer of the DataRobot technical market story and works closely with product, marketing, and sales. As a former Customer Facing Data Scientist at DataRobot, Atalia worked with customers in different industries as a trusted advisor on AI, solved complex data science problems, and helped them unlock business value across the organization.

Whether speaking to customers and partners or presenting at industry events, she advocates for the DataRobot story and for adopting AI/ML across the organization using the DataRobot platform. She has spoken on topics such as MLOps, Time Series Forecasting, sports projects, and use cases from various verticals at industry events like AI Summit NY, AI Summit Silicon Valley, and the Marketing AI Conference (MAICON), and at partner events such as Snowflake Summit, Google Next, masterclasses, joint webinars, and more.

Atalia holds a Bachelor of Science in industrial engineering and management and two master’s degrees, in Business Administration (MBA) and Business Analytics.

Aslihan Buner is Senior Product Marketing Manager for AI Observability at DataRobot, where she builds and executes go-to-market strategy for LLMOps and MLOps products. She partners with product management and development teams to identify key customer needs and to strategically shape and implement messaging and positioning. Her passion is to target market gaps, address pain points across all verticals, and tie them to solutions.

Kateryna Bozhenko is a Product Manager for AI Production at DataRobot with broad experience in building AI solutions. With degrees in International Business and Healthcare Administration, she is passionate about helping users make AI models work effectively to maximize ROI and experience the true magic of innovation.