At Microsoft Ignite, we’re introducing significant updates across our entire cloud and AI infrastructure.

The foundation of Microsoft’s AI advancements is its infrastructure, custom designed and built from the ground up to power some of the world’s most widely used and demanding services. While generative AI is now transforming how businesses operate, we’ve spent more than a decade on this journey: developing our infrastructure, designing our systems, and reimagining our approach from software to silicon. The end-to-end optimization at the heart of our systems approach gives organizations the agility to deploy AI that can transform their operations and industries.

From agile startups to multinational corporations, Microsoft’s infrastructure offers more choice in performance, power, and cost efficiency so that our customers can continue to innovate. At Microsoft Ignite, we’re introducing significant updates across our entire cloud and AI infrastructure, from advancements in chips and liquid cooling to new data integrations and more flexible cloud deployments.

Unveiling the latest silicon updates across Azure infrastructure

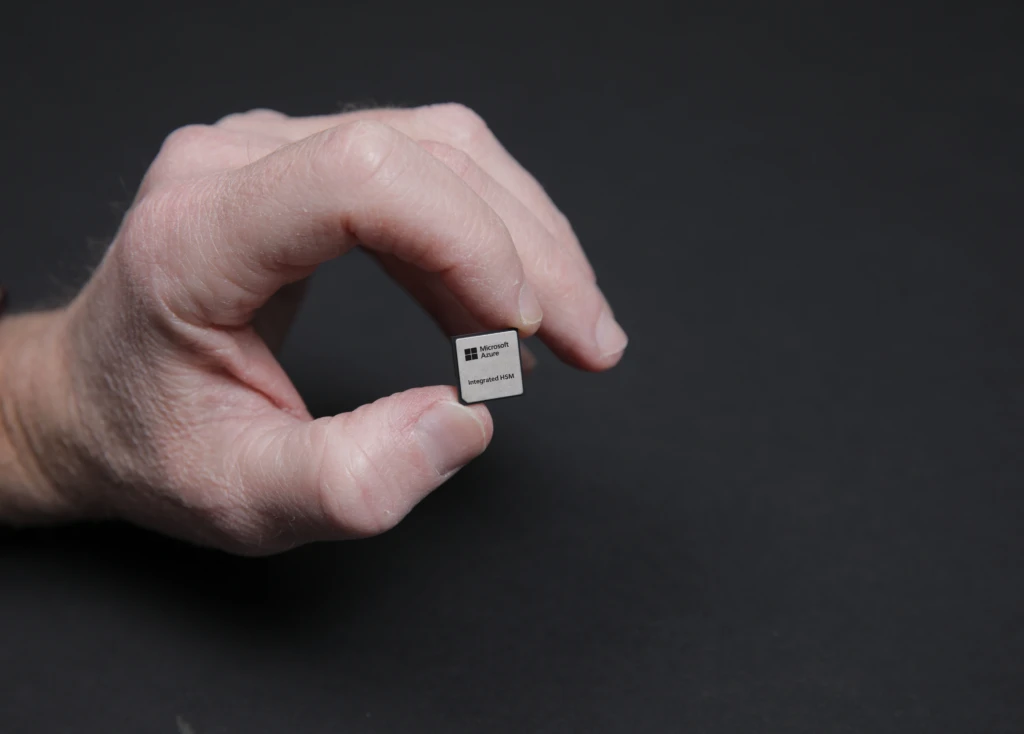

As part of our systems approach to optimizing every layer of our infrastructure, we continue to combine the best of the industry with innovation drawn from our own unique perspective. In addition to Azure Maia AI accelerators and Azure Cobalt central processing units (CPUs), we are expanding our custom silicon portfolio to deliver greater efficiency and security across our infrastructure. Azure Integrated HSM is our newest in-house security chip: a dedicated hardware security module (HSM) that hardens key management by keeping encryption and signing keys within the bounds of the HSM, without compromising performance or increasing latency. Starting next year, Azure Integrated HSM will be installed in every new server in Microsoft’s datacenters to increase protection across Azure’s hardware fleet for both confidential and general-purpose workloads.
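To make the idea of keys remaining “within the bounds of the HSM” concrete, here is a minimal Python sketch of the interface pattern, under clearly stated assumptions: `ToyHsm` and its methods are hypothetical names, HMAC-SHA256 stands in for a real signing algorithm, and an ordinary Python object cannot actually enforce a hardware boundary. What it illustrates is that callers receive only an opaque handle and an operation result, never the key bytes. It is not an Azure Integrated HSM API.

```python
import hashlib
import hmac
import secrets


class ToyHsm:
    """Hypothetical, conceptual model of an HSM interface.

    Key material is generated and used only inside this object; callers
    hold opaque handles. A real HSM enforces this boundary in hardware,
    which a Python object cannot do.
    """

    def __init__(self) -> None:
        # Key bytes live only in this private mapping and are never returned.
        self._keys: dict[str, bytes] = {}

    def generate_key(self) -> str:
        handle = secrets.token_hex(8)  # opaque reference handed to the caller
        self._keys[handle] = secrets.token_bytes(32)
        return handle

    def sign(self, handle: str, message: bytes) -> bytes:
        # Assumption: HMAC-SHA256 stands in for a real signing algorithm.
        return hmac.new(self._keys[handle], message, hashlib.sha256).digest()

    def verify(self, handle: str, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison of the recomputed tag with the given one.
        return hmac.compare_digest(self.sign(handle, message), tag)


if __name__ == "__main__":
    hsm = ToyHsm()
    handle = hsm.generate_key()
    tag = hsm.sign(handle, b"config-blob")
    print(hsm.verify(handle, b"config-blob", tag))  # True
    print(hsm.verify(handle, b"tampered", tag))     # False
```

The design choice worth noting is the handle: because every operation takes a handle rather than a key, no code path outside the module ever needs to see the key itself.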

We are also introducing Azure Boost DPU, our first in-house data processing unit (DPU). It is designed to run data-centric workloads with high efficiency and low power, consolidating multiple components of a traditional server into a single piece of dedicated silicon. We expect future DPU-equipped servers to run cloud storage workloads at one-third the power and four times the performance of existing servers.
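The two expectations above can be combined into a rough performance-per-watt figure. The sketch below assumes that “three times less power” means one-third of the baseline power and that both improvements hold at the same time. The function name is hypothetical, and the resulting ratio is a back-of-the-envelope derivation, not a figure Microsoft has stated.

```python
def perf_per_watt_gain(perf_multiplier: float, power_fraction: float) -> float:
    """Relative performance per watt compared with a baseline server.

    perf_multiplier: new performance divided by baseline performance
    power_fraction:  new power draw divided by baseline power draw
    """
    return perf_multiplier / power_fraction


# Assumption: "three times less power" read as 1/3 of baseline power,
# combined with "four times the performance" on the same workload.
gain = perf_per_watt_gain(perf_multiplier=4.0, power_fraction=1 / 3)
print(f"~{gain:.0f}x performance per watt")  # ~12x performance per watt
```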

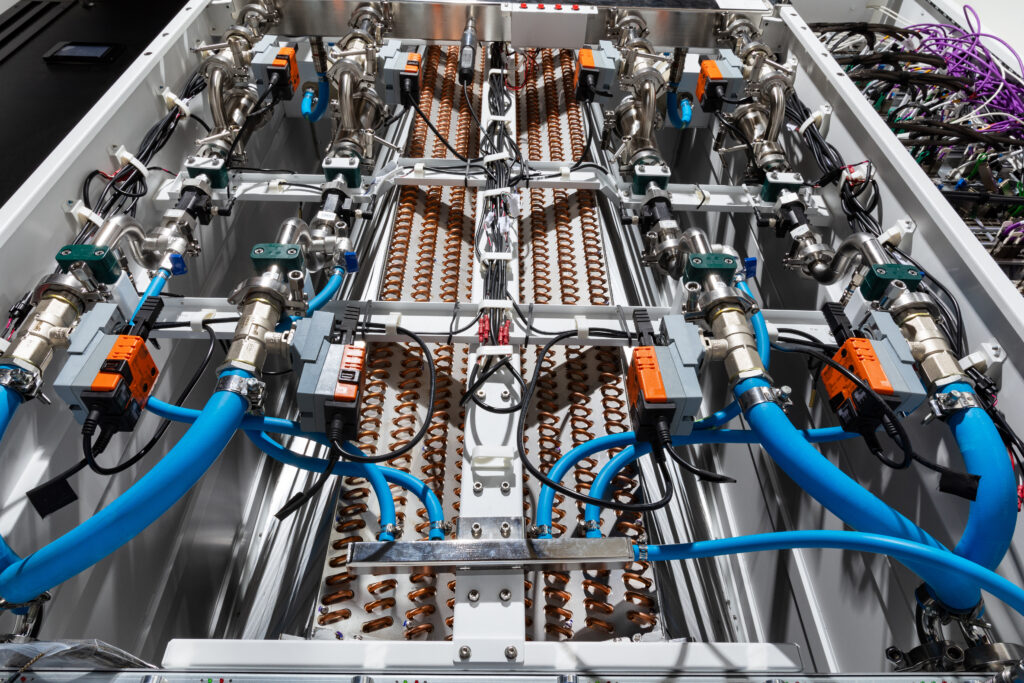

We also continue to advance our cooling technology for GPUs and AI accelerators with our next-generation liquid cooling “sidekick” rack (a heat exchanger unit), which supports AI systems built on silicon from industry leaders as well as our own. The unit can be retrofitted into Azure datacenters to cool large-scale AI systems, such as the NVIDIA GB200 systems in our AI infrastructure.

Beyond cooling, we are making power delivery more efficient to meet the evolving demands of AI and hyperscale systems. We have collaborated with Meta on a new disaggregated power rack design intended to enhance flexibility and scalability as we bring AI infrastructure into our existing datacenter footprint. Each disaggregated power rack will feature 400-volt DC power, which enables up to 35% more AI accelerators in each server rack and allows dynamic power adjustments to meet the varying demands of AI workloads. We are open sourcing these cooling and power rack specifications through the Open Compute Project so the wider industry can benefit.

Azure’s AI infrastructure builds on this hardware and silicon innovation to power some of the most groundbreaking AI advancements in the world, from revolutionary frontier models to large-scale generative inferencing. In October, we announced the launch of the ND H200 v5 virtual machine (VM) series, which uses NVIDIA H200 GPUs with enhanced memory bandwidth. Because we continuously optimize the software across these VMs, Azure delivers performance improvements generation over generation. From NVIDIA H100 to H200 GPUs, our rate of performance improvement was twice the industry’s, as demonstrated in industry benchmarks.

We are also excited to announce that Microsoft is bringing the NVIDIA Blackwell platform to the cloud. We are beginning to bring these systems online in preview, co-validating and co-optimizing them with NVIDIA and other AI leaders. Azure ND GB200 v6 will be a new AI-optimized virtual machine series that combines the NVIDIA GB200 NVL72 rack-scale design with state-of-the-art Quantum InfiniBand networking, connecting tens of thousands of Blackwell GPUs to deliver AI supercomputing performance at scale.

Today we are also sharing our latest advancement in CPU-based supercomputing: the Azure HBv5 virtual machine. Powered by custom AMD EPYC™ 9V64H processors available only on Azure, these VMs will be up to eight times faster than the latest bare-metal and cloud alternatives on a variety of HPC workloads, and up to 35 times faster than on-premises servers at the end of their lifecycle. These performance improvements are made possible by 7 TB/s of memory bandwidth from high-bandwidth memory (HBM) and the most scalable AMD EPYC server platform to date. Customers can sign up now for the HBv5 virtual machine preview, which will begin in 2025.

Accelerating AI innovation through cloud migration and modernization

To get the most from AI, organizations need to integrate data residing in their critical business applications. Migrating and modernizing these applications to the cloud helps enable that integration and paves the way to faster innovation while delivering improved performance and scalability. Choosing Azure means selecting a platform that natively supports all the mission-critical enterprise applications and data you need to fully leverage advanced technologies like AI. This includes your workloads on SAP, VMware, and Oracle, as well as open-source software and Linux.

For example, thousands of customers run their SAP ERP applications on Azure, and we are bringing unique innovations to these organizations, such as the integration between Microsoft Copilot and Joule, SAP’s AI assistant. Companies like L’Oréal, Hilti, Unilever, and Zeiss have migrated their mission-critical SAP workloads to Azure so they can innovate faster. And since launching Azure VMware Solution, we’ve been expanding its geographic footprint to support customers globally. Azure VMware Solution is now available in 33 regions, with support for VMware Cloud Foundation (VCF) portable subscriptions.

We are also continually improving Oracle Database@Azure to better support our enterprise customers’ mission-critical Oracle workloads. Customers like The Craneware Group and Vodafone have adopted Oracle Database@Azure for its high performance and low latency, which lets them focus on streamlining their operations while gaining access to advanced security, data governance, and AI capabilities in the Microsoft Cloud. Today we’re announcing that Microsoft Purview supports Oracle Database@Azure, providing comprehensive data governance and compliance capabilities that organizations can use to manage, secure, and track data across Oracle workloads.

Oracle and Microsoft also plan to offer Oracle Exadata Database Service on Exascale Infrastructure in Oracle Database@Azure for hyper-elastic scaling and pay-per-use economics. In addition, we’ve expanded Oracle Database@Azure to a total of nine regions and enhanced its Microsoft Fabric integration with Open Mirroring capabilities.

To make it easier to migrate and modernize your applications, starting today you can assess your application’s readiness for Azure using Azure Migrate. The new application-aware method provides technical and business insights to help you migrate an entire application, with all of its dependencies, as a single unit.
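Migrating an application “with all dependencies as one” amounts to grouping servers that depend on each other into a single migration unit. The Python sketch below shows one simple way to form such groups: it treats dependencies as edges in an undirected graph and collects the connected components. This is an illustrative assumption, not how Azure Migrate computes its assessments, and the function and server names are hypothetical.

```python
from collections import defaultdict


def migration_groups(dependencies: list[tuple[str, str]]) -> list[set[str]]:
    """Group servers into migration units (hypothetical helper).

    Servers linked by a dependency, directly or transitively, end up in
    the same group, so each group can be moved together.
    """
    graph: dict[str, set[str]] = defaultdict(set)
    for a, b in dependencies:
        # Direction does not matter for grouping, so store both ways.
        graph[a].add(b)
        graph[b].add(a)

    seen: set[str] = set()
    groups: list[set[str]] = []
    for start in graph:
        if start in seen:
            continue
        # Iterative depth-first search collects one connected component.
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            group.add(node)
            stack.extend(graph[node] - seen)
        groups.append(group)
    return groups


# Hypothetical dependency map discovered for a small estate.
deps = [("web-01", "api-01"), ("api-01", "sql-01"), ("batch-01", "queue-01")]
for group in migration_groups(deps):
    print(sorted(group))
# ['api-01', 'sql-01', 'web-01']
# ['batch-01', 'queue-01']
```

Grouping by connected components is deliberately conservative: it never splits servers that talk to each other, at the cost of sometimes producing large groups in tightly coupled estates.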

Optimizing your operations with an adaptive cloud for business growth

Azure’s multicloud and hybrid approach, or adaptive cloud, integrates separate teams, distributed locations, and diverse systems into a single model for operations, security, applications, and data. This allows organizations to use cloud-native and AI technologies to operate across hybrid, multicloud, edge, and IoT environments. Azure Arc plays an important role in this approach by extending Azure services to any infrastructure and helping organizations manage their workloads and operate across different environments. Azure Arc now has more than 39,000 customers across every industry, including La Liga, Coles, and The World Bank.

We’re excited to introduce Azure Local, a new cloud-connected hybrid infrastructure offering that is provisioned and managed in Azure. Azure Local brings Azure Stack capabilities together into one unified platform. Powered by Azure Arc, Azure Local can run containers, servers, and Azure Virtual Desktop on Microsoft-validated hardware from Dell, HPE, Lenovo, and others. This helps organizations meet custom requirements for latency, near-real-time data processing, and compliance. Azure Local comes with enhanced default security settings to protect your data, along with flexible configuration options such as GPU-enabled servers for AI inferencing.

We recently announced the general availability of Windows Server 2025, with new features that include easier upgrades, advanced security, and capabilities that enable AI and machine learning. Windows Server 2025 is also previewing a hotpatching subscription option, enabled by Azure Arc, that lets organizations install updates with fewer restarts, a major time saver.

We’re also announcing the preview of SQL Server 2025, an enterprise AI-ready database platform that uses Azure Arc to deliver cloud agility anywhere. This new version builds on SQL Server’s industry-leading security and performance and has AI built in, simplifying AI application development and retrieval-augmented generation (RAG) patterns with secure, performant, and easy-to-use vector support. With Azure Arc, SQL Server 2025 offers cloud capabilities that help customers manage, secure, and govern their SQL estate at scale across on-premises and cloud environments.
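Vector support underpins the retrieval step of a RAG pattern: documents and queries are stored as embedding vectors, and the passages most similar to the query are retrieved and handed to a model as context. The plain-Python sketch below shows that retrieval step using cosine similarity over tiny, made-up three-dimensional embeddings. It does not use SQL Server’s vector features, and every name and value in it is hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # guard against zero-length vectors


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        docs.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Hypothetical toy embeddings; real embeddings come from a model and have
# hundreds or thousands of dimensions.
docs = {
    "returns-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "warranty": [0.7, 0.0, 0.3],
}
query = [1.0, 0.0, 0.0]
print(top_k(query, docs))  # ['returns-policy', 'warranty']
```

In a full RAG pipeline, the text of the retrieved documents would then be added to the model prompt; a database with built-in vector support moves the similarity search next to the data instead of into application code like this.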

Transform with Azure infrastructure to achieve cloud and AI success

Successful transformation with AI starts with a powerful, secure, and adaptive infrastructure strategy. And as you evolve, you need a cloud platform that adapts and scales with your needs. Azure is that platform, providing the optimal environment for integrating your applications and data so that you can start innovating with AI. As you design, deploy, and manage your environment and workloads on Azure, you have access to best practices and industry-leading technical guidance to help you accelerate your AI adoption and achieve your business goals.
