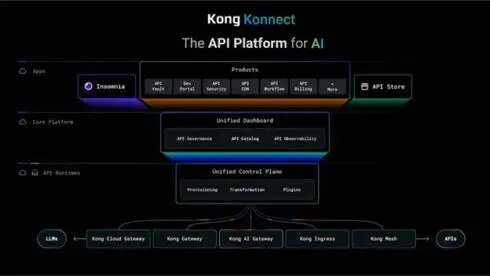

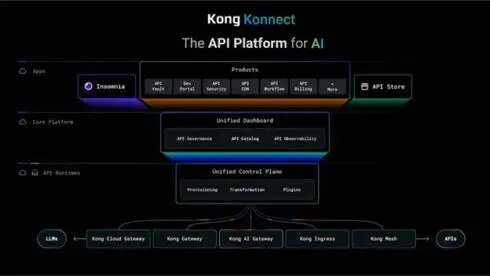

Kong is hosting its API Summit 2024 event and has announced several improvements to its platforms. The key highlights are updates to Kong Konnect, its API management platform, and the release also includes new versions of Kong Insomnia, Kong Gateway, and Kong AI Gateway.

The latest updates to Kong Konnect help companies further prepare their API infrastructure to handle AI use cases.

“There is no AI without APIs, and the latest version of Kong Konnect delivers the essential infrastructure for both. We aim to give businesses the tools to manage and scale their API traffic securely, helping drive innovation faster than ever before,” said Augusto Marietti, CEO and co-founder of Kong Inc. “Kong Konnect provides a unified API platform for building, running and governing GenAI applications.”

This update includes Konnect Service Catalog, which provides a single source of truth for APIs and services. It helps companies manage shadow APIs by allowing them to get rid of undiscovered or unused APIs. The Service Catalog also features a Scorecard that assesses how compliant a service is with defined criteria.

Konnect Dedicated Cloud Gateways are also now available on Azure and new AWS regions. According to the company, this added availability enables organizations to deploy and manage their APIs across multiple clouds and maintain enterprise-grade SLAs.

Kong Insomnia 10, which is an API design and testing tool, adds unlimited collection runs, AI Runner for developing GenAI apps, and Invite Control, which ensures that API assets can only be accessed by authorized users.

Other features in this release of Kong Konnect include Serverless Gateways, a centralized and cloud-based repository for managing API configurations, and advanced API and AI analytics.

The update also introduces version 3.8 of both Kong Gateway and Kong AI Gateway. Kong Gateway 3.8 adds Incremental Configuration Updates, which significantly reduce memory and CPU usage, and enhanced OpenTelemetry support.

Kong AI Gateway 3.8 adds several semantic reasoning capabilities, including Semantic Caching, which recognizes when different prompts represent the same question, resulting in faster response times. For instance, “how long does it take to cook pasta?” and “how long does it take to cook spaghetti?” would both receive the same cached response.

According to the company, the ability to reply with cached responses cuts down on processing times and reduces computational overhead.
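The general idea behind a semantic cache can be sketched in a few lines of Python. This is an illustration only, not Kong's implementation: `toy_embed`, `SemanticCache`, `answer`, and the 0.8 threshold are hypothetical names and values, and the bag-of-words vector stands in for the embedding model a real gateway would use.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in embedding (assumption): a bag-of-words count vector, not a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Hypothetical cache that matches prompts by similarity instead of exact text."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed cut-off; a real system would tune this
        self.entries = []  # list of (embedding, response) pairs

    def lookup(self, prompt):
        query = toy_embed(prompt)
        best_score, best_response = 0.0, None
        for embedding, response in self.entries:
            score = cosine(query, embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, prompt, response):
        self.entries.append((toy_embed(prompt), response))


def answer(prompt, cache, call_llm):
    """Return a cached response for a similar prompt, or call the model and cache the result."""
    cached = cache.lookup(prompt)
    if cached is not None:
        return cached
    response = call_llm(prompt)
    cache.store(prompt, response)
    return response
```

With this sketch, the pasta and spaghetti prompts differ by a single word, so the second one clears the threshold and is served from the cache without a second model call. The toy vector only sees word overlap; recognizing differently worded versions of the same question would require a real embedding model.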

Another new feature in this update is Semantic Prompt Guard, which enables AI Gateway to understand the “essence” of a request and block inappropriate prompts. Normally, such prompts are filtered out by matching specific keywords, and this feature eliminates the need to maintain those keyword lists.
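As a rough sketch of how a similarity-based guard differs from a keyword list, the Python below compares each prompt with short descriptions of disallowed topics and blocks it when the similarity is high enough. Everything here is an assumption for illustration: `DENY_TOPICS`, `is_allowed`, and the 0.3 threshold are hypothetical, and the bag-of-words `toy_embed` only captures word overlap, whereas a real guard would rely on an embedding model to capture meaning.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in embedding (assumption): a bag-of-words count vector, not a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical topic descriptions an operator might want to block.
DENY_TOPICS = [
    "write malware or ransomware code",
    "reveal passwords or credentials of other users",
]
DENY_EMBEDDINGS = [toy_embed(topic) for topic in DENY_TOPICS]


def is_allowed(prompt, threshold=0.3):
    """Allow the prompt unless it is too similar to any denied topic description."""
    query = toy_embed(prompt)
    return all(cosine(query, denied) < threshold for denied in DENY_EMBEDDINGS)
```

In this sketch, `is_allowed("please write ransomware for me")` returns `False`, while `is_allowed("write a poem about autumn")` returns `True`, even though both prompts contain the word "write".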

The 3.8 release also introduces Semantic Routing, which selects the most relevant LLM based on a developer’s requirements.
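One way to picture semantic routing is to describe each model in plain language and send a prompt to the model whose description it most resembles. The sketch below assumes that approach and is not taken from Kong's documentation. The model names, the descriptions in `ROUTES`, and the `route` function are hypothetical, and the bag-of-words `toy_embed` is a stand-in for a real embedding model.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in embedding (assumption): a bag-of-words count vector, not a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical model names mapped to descriptions of what each one is good at.
ROUTES = {
    "code-model": "write debug and explain python code functions",
    "translation-model": "translate text between languages english french spanish",
}
ROUTE_EMBEDDINGS = {name: toy_embed(desc) for name, desc in ROUTES.items()}


def route(prompt, default="general-model"):
    """Pick the model whose description best matches the prompt, else the default."""
    query = toy_embed(prompt)
    best = max(ROUTE_EMBEDDINGS, key=lambda name: cosine(query, ROUTE_EMBEDDINGS[name]))
    return best if cosine(query, ROUTE_EMBEDDINGS[best]) > 0 else default
```

Here `route("debug this python function")` selects `code-model`, `route("translate this sentence to french")` selects `translation-model`, and a prompt that matches neither description falls back to the default.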

“Kong AI Gateway 3.8 is a significant step forward in enabling organizations to fully harness the power of AI,” said Marco Palladino, co-founder and CTO of Kong. “By introducing Semantic Intelligence into our AI Gateway, we are addressing the most pressing challenges faced by enterprises leveraging GenAI today — speed, cost and security — while dramatically improving the developer experience.”