Announcing new features designed to further enhance data privacy and security capabilities for customers building and scaling with Azure OpenAI.

In today’s rapidly evolving digital landscape, data privacy and security are paramount for enterprises. As organizations increasingly use AI to drive innovation, Microsoft Azure OpenAI Service offers robust enterprise controls built to meet the highest standards of security and compliance. Built on Azure’s backbone, Azure OpenAI integrates with your organization’s tools to help ensure the right guardrails are in place. That is why customers such as KPMG, Heineken, Unity, and PwC have chosen Azure OpenAI for their generative AI applications.

We are excited to announce new features designed to further enhance data privacy and security capabilities for over 60,000 customers building and scaling with Azure OpenAI.

Announcing Azure OpenAI Data Zones

Since day one, Azure OpenAI has provided data residency, with control over data processing and storage across our 28 existing regions. Today, we are announcing Azure OpenAI Data Zones for the European Union and the United States. Differences in model availability between regions have historically required customers to manage multiple resources and route traffic between them, slowing development and complicating management. Data Zones simplify generative AI application management by letting data be processed in any Azure OpenAI region within a zone while keeping data residency within that geographic boundary, giving customers easier access to models and higher throughput.

Enterprises rely on Azure for data privacy and data residency

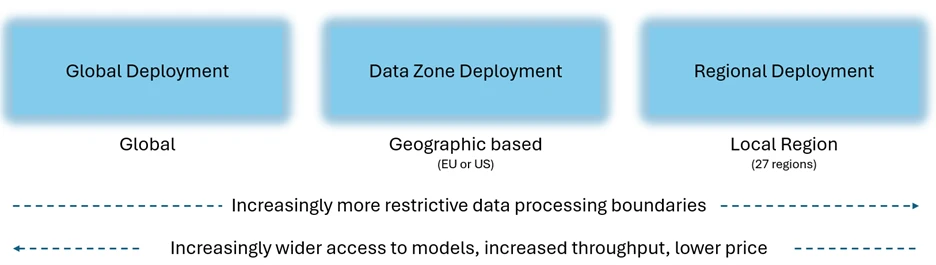

The new Data Zones feature builds on the existing strength of Azure OpenAI’s data processing and storage options. Azure OpenAI gives customers a choice of Global, Data Zone, and Regional deployments, letting them improve throughput and model availability while simplifying management. With every deployment type, data is stored at rest within the Azure region you chose for your resource.

- Global deployments: Available in over 25 regions, this option offers access to all new models (including the o1 series) at the lowest price and with the highest throughput. Data is stored at rest within the customer-selected region of the Azure resource, while the global backbone ensures optimized response times.

- Data Zones: Data Zones are for customers who need greater data processing assurances while accessing the latest models, combined with the flexibility of cross-region load balancing within the customer-selected geographic boundary. The United States Data Zone spans all Azure OpenAI regions within the United States, and the European Union Data Zone spans all Azure OpenAI regions located within European Union member states. The new Data Zones deployment type will be available in the coming month.

- Regional deployments: For the strictest levels of data control, regional deployments ensure processing and storage occur within the resource’s geography. This option offers the most limited model availability compared to Global and Data Zone deployments.
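To make the three deployment types concrete, here is a minimal Python sketch of the routing rule each one implies: a Global deployment may process a request in any region, a Data Zone deployment only in regions inside its zone, and a Regional deployment only in the resource’s own region. The region lists in `DATA_ZONES`, the `Deployment` class, and the least-loaded rule in `pick_region` are hypothetical illustrations, not Azure’s actual routing logic; the service performs this routing for you.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical zone membership for illustration only; Azure defines the real
# set of regions in each Data Zone.
DATA_ZONES = {
    "US": {"eastus", "eastus2", "westus", "westus3"},
    "EU": {"swedencentral", "francecentral", "germanywestcentral"},
}


@dataclass(frozen=True)
class Deployment:
    kind: str                   # "global", "datazone", or "regional"
    resource_region: str        # where the resource lives and data is stored at rest
    zone: Optional[str] = None  # Data Zone name; only meaningful for "datazone"


def may_process_in(dep: Deployment, region: str) -> bool:
    """Return True if this sketch's policy allows processing in `region`."""
    if dep.kind == "global":
        return True  # any region with capacity
    if dep.kind == "datazone":
        return region in DATA_ZONES.get(dep.zone, set())
    if dep.kind == "regional":
        return region == dep.resource_region
    raise ValueError(f"unknown deployment kind: {dep.kind}")


def pick_region(dep: Deployment, load: dict[str, float]) -> str:
    """Pick the least-loaded eligible region (hypothetical balancing rule)."""
    eligible = [r for r in load if may_process_in(dep, r)]
    if not eligible:
        raise RuntimeError("no eligible region is available")
    return min(eligible, key=lambda r: load[r])
```

With loads of `{"eastus": 0.9, "westus3": 0.4, "swedencentral": 0.1}`, a US Data Zone deployment picks `westus3`, a Global deployment picks `swedencentral`, and a Regional deployment in `eastus` stays in `eastus` even though it is the busiest region. That trade-off between placement freedom and data-location guarantees is the one the three deployment types expose.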

Since launch, customers have had granular control over where their data is processed and stored, upholding Azure’s data residency commitments.

Securely extending generative AI applications with your data

Azure OpenAI integrates effortlessly with hundreds of Azure and Microsoft services that enable you to extend your solution with your existing data storage and search capabilities. The two most common extensions are Azure AI Search and Microsoft Fabric.

Azure AI Search provides secure information retrieval at scale over customer-owned content in traditional and generative AI applications. Results from document search and data exploration can be fed into prompts, grounding generative AI applications on your data while maintaining Azure scale, security, and control.
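The grounding pattern described above (retrieve relevant content, then place it in the prompt) can be sketched in a few lines. This is a minimal, self-contained illustration: the toy keyword ranking in `search` stands in for Azure AI Search and is not its API, and the document IDs, system-prompt wording, and `build_grounded_messages` helper are hypothetical. The output uses the common chat-message shape (`role`/`content` dictionaries).

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set used by the toy ranking below."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, docs: dict[str, str], top: int = 2) -> list[tuple[str, str]]:
    """Toy retrieval: rank documents by how many query terms they share."""
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), doc_id, body) for doc_id, body in docs.items()]
    scored = [s for s in scored if s[0] > 0]   # drop documents with no overlap
    scored.sort(key=lambda s: (-s[0], s[1]))   # best score first, ties by id
    return [(doc_id, body) for _, doc_id, body in scored[:top]]


def build_grounded_messages(query: str, docs: dict[str, str], top: int = 2) -> list[dict]:
    """Put retrieved passages into the system prompt so answers stay grounded."""
    hits = search(query, docs, top)
    sources = "\n".join(f"[{doc_id}] {body}" for doc_id, body in hits)
    system = (
        "Answer using only the sources below and cite them by id. "
        "If the sources do not contain the answer, say so.\n\n" + sources
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": query},
    ]
```

In a real application the retrieval step would query your search index, and access to that index would be governed by the same Azure security controls described in this post.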

Microsoft Fabric’s unified data lake, OneLake, gives access to an organization’s entire multicloud data estate from a single, intuitively organized data lake. Bringing all company data into one data lake simplifies integrating that data into your generative AI application while upholding company data governance and compliance controls.

Enterprises rely on Azure for safety, security, and compliance

Content Safety by Default

Azure OpenAI comes integrated with Azure AI Content Safety by default at no additional cost: both prompts and completions are filtered through an ensemble of classification models that detect and block harmful content. Azure provides the widest range of content safety tools, including the new Prompt Shields and groundedness detection capabilities. Customers with advanced requirements can customize these settings, for example by adjusting harm severity thresholds or enabling asynchronous filtering for lower latency.
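Adjusting harm severity amounts to choosing, per category, the severity at which content is blocked. The sketch below shows only that policy step: the classifier scores are made-up inputs, and the category names, four severity levels, and default threshold of `MEDIUM` are assumptions for illustration, not a copy of the service’s configuration schema.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Ordered severity levels; higher means more harmful."""
    SAFE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed policy: block content at or above MEDIUM in every category.
DEFAULT_THRESHOLDS = {
    c: Severity.MEDIUM for c in ("hate", "sexual", "violence", "self_harm")
}


def blocked_categories(scores: dict[str, Severity],
                       thresholds: dict[str, Severity] = DEFAULT_THRESHOLDS) -> list[str]:
    """Return the categories whose score meets or exceeds the blocking threshold."""
    return sorted(
        c for c, sev in scores.items()
        if c in thresholds and sev >= thresholds[c]
    )
```

A stricter configuration simply lowers a threshold: `dict(DEFAULT_THRESHOLDS, sexual=Severity.LOW)` would also block low-severity content in that one category while leaving the others unchanged.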

Secure access with Managed Identity through Entra ID

Microsoft recommends securing your Azure OpenAI resources with Microsoft Entra ID to build zero-trust access controls, helping prevent identity-based attacks and manage access to resources. By applying least-privilege principles, enterprises can enforce stringent security policies. Entra ID also removes the need for hard-coded credentials such as API keys, further improving security across the solution.

Additionally, Managed Identity integrates seamlessly with Azure role-based access control (RBAC), providing precise control over resource permissions.
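Replacing hard-coded keys with Entra ID means each request carries a short-lived bearer token that the application obtains and refreshes on its own. In Python this is normally handled by the `azure-identity` library (for example, `get_bearer_token_provider(DefaultAzureCredential(), "https://cognitiveservices.azure.com/.default")`, whose result can be passed to the OpenAI client’s `AzureOpenAI(azure_ad_token_provider=...)`). The stdlib-only sketch below just illustrates the caching-and-refresh idea behind such a provider; `fetch` is a hypothetical stand-in for a managed-identity token request, and the five-minute refresh margin is an assumption.

```python
import time
from typing import Callable, Optional, Tuple


class CachedTokenProvider:
    """Return a bearer token, fetching a new one shortly before expiry."""

    def __init__(self,
                 fetch: Callable[[], Tuple[str, float]],
                 refresh_margin: float = 300.0,
                 clock: Callable[[], float] = time.time):
        # Hypothetical: fetch() returns (token, expires_on in epoch seconds).
        self._fetch = fetch
        self._margin = refresh_margin  # refresh this many seconds early
        self._clock = clock            # injectable so expiry can be tested
        self._token: Optional[str] = None
        self._expires_on = 0.0

    def __call__(self) -> str:
        # Fetch on first use, or when the cached token is about to expire.
        if self._token is None or self._clock() >= self._expires_on - self._margin:
            self._token, self._expires_on = self._fetch()
        return self._token

    def auth_header(self) -> dict:
        """HTTP header carrying the current token; no secret lives in code."""
        return {"Authorization": f"Bearer {self()}"}
```

Because access is granted to the identity rather than to a shared key, what that identity can do is then governed by the RBAC role assignments described above.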

Enhanced data security with customer-managed key encryption

The data stored by Azure OpenAI in your subscription is encrypted by default using a Microsoft-managed key. To further enhance the security of your application, Azure OpenAI lets customers bring their own customer-managed keys (CMK) to encrypt stored data, including data in Azure Cosmos DB, Azure AI Search, or your Azure Storage account.

Enhanced security with private networking

Secure your AI applications by isolating them from the public internet using Azure virtual networks and Azure Private Link. This setup ensures that traffic between services remains within Microsoft’s backbone network, allowing secure connections to on-premises resources via ExpressRoute, VPN tunnels, and peered virtual networks.
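With Private Link in place, a client inside the virtual network should resolve the service endpoint to a private address from the network’s own address space. The sketch below checks that condition using Python’s standard `ipaddress` module; the function name and the sample CIDR ranges are hypothetical, and it is a diagnostic aid under those assumptions, not a substitute for Azure’s network configuration tools.

```python
import ipaddress


def resolves_privately(resolved_ips: list[str], vnet_cidrs: list[str]) -> bool:
    """True only if every resolved address lies inside one of the VNet ranges."""
    nets = [ipaddress.ip_network(c) for c in vnet_cidrs]
    addrs = [ipaddress.ip_address(ip) for ip in resolved_ips]
    # An empty result is treated as a failure rather than vacuously "private".
    return bool(addrs) and all(any(a in n for n in nets) for a in addrs)
```

To collect `resolved_ips`, one could run `socket.getaddrinfo(host, 443)` from a machine inside the virtual network against your resource’s endpoint hostname. A public address in the result suggests DNS is not yet pointing at the private endpoint.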

Last week we also announced private networking support for Azure AI Studio, enabling users to use advanced “add your data” features through the Studio UI without sending traffic over a public network.

Commitment to compliance

We are committed to supporting the compliance requirements of customers in regulated industries, including healthcare, finance, and government. Azure OpenAI already meets a wide range of industry standards and certifications, including HIPAA, SOC 2, and FedRAMP, helping enterprises trust that their AI solutions remain secure and compliant.

Enterprises trust Azure reliability for production level scale

Azure OpenAI Service powers many of the world’s largest generative AI applications today, including GitHub Copilot, Microsoft 365 Copilot, and Microsoft Security Copilot. Our own product teams and customers choose Azure OpenAI because we offer an industry-leading 99.9% reliability SLA on both the pay-as-you-go Standard and Provisioned-Managed offerings. We are making that even better with a new latency SLA.
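To put a 99.9% figure in perspective, the arithmetic below converts an uptime percentage into the downtime it permits over a billing period. It is only arithmetic on the headline number under the assumption of a 30-day month; how Microsoft actually measures availability and calculates service credits is defined in its published SLA terms.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted by an uptime percentage over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100
```

For a 30-day month (43,200 minutes), 99.9% leaves roughly 43.2 minutes of permitted downtime; a 31-day month allows roughly 44.6 minutes.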

Announcing new features: Latency SLAs with Provisioned-Managed

Enabling customers to scale with their product growth without compromising on latency is important to the quality of your customer experience. Our Provisioned-Managed (PTUM) deployment type already offers the greatest scale with low latency. We are excited to announce formal latency service level agreements (SLAs) for PTUM, providing performance assurances at scale. These SLAs will roll out in the coming month. Bookmark our product newsfeed for more updates and enhancements.
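This post does not spell out how the latency SLA will be measured, so the following is only a sketch of how a team might track tail latency against its own target using request timings it records itself. The nearest-rank percentile, the default of p95, and the `meets_latency_target` helper are assumptions chosen for illustration, not the SLA’s definition.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_target(samples_ms: list[float], target_ms: float, p: float = 95.0) -> bool:
    """Check whether the p-th percentile latency is within the target."""
    return percentile(samples_ms, p) <= target_ms
```

Tracking a high percentile rather than the mean is the usual choice here, because a small share of slow responses can dominate user experience while barely moving the average.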

Azure OpenAI continues to be the generative AI platform of choice that customers can trust, building on Azure’s long history of robust data privacy and residency, safety and security, and reliability that customers rely on every day. The safety and security of your applications remains our highest priority.
