In the past few weeks, multiple “autonomous background coding agents” have been released.

- Supervised coding agents: Interactive chat agents that are driven and steered by a developer. Create code locally, in the IDE. Tool examples: GitHub Copilot, Windsurf, Cursor, Cline, Roo Code, Claude Code, Aider, Goose, …

- Autonomous background coding agents: Headless agents that you send off to work autonomously through a whole task. Code gets created in an environment spun up exclusively for that agent, and usually results in a pull request. Some of them also are runnable locally though. Tool examples: OpenAI Codex, Google Jules, Cursor background agents, Devin, …

I gave a task to OpenAI Codex and some other agents to see what I can learn. The following is a record of one particular Codex run, to help you look behind the scenes and draw your own conclusions, followed by some of my own observations.

The task

We have an internal application called Haiven that we use as a demo frontend for our software delivery prompt library, and to run some experiments with different AI assistance experiences on software teams. The code for that application is public.

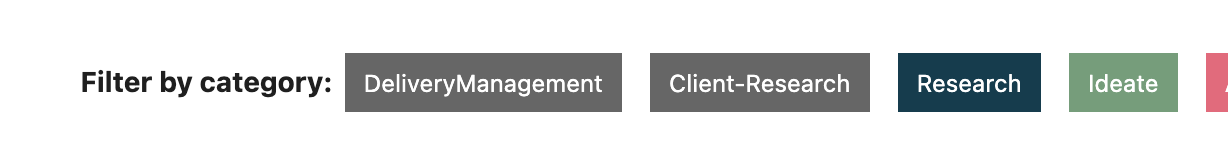

The task I gave to Codex was regarding the following UI issue:

Actual:

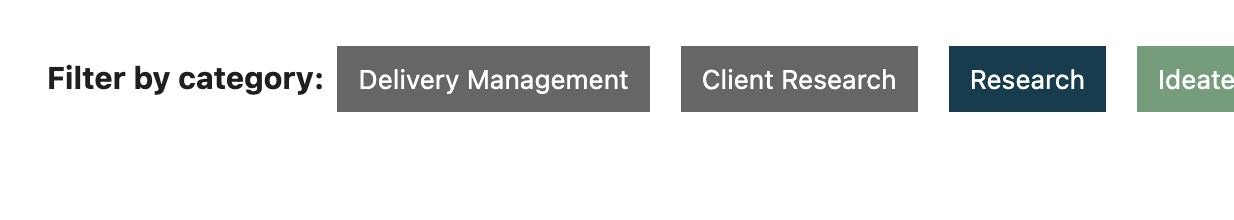

What I wanted from the agent:

Prompt: When we create the filter labels on the dashboard, we create human readable labels based on the categories we have. "client-research" is turned into "Client-Research", "deliveryManagement" into "DeliveryManagement". Improve the sophistication of the "category-to-human-readable" logic so that the labels are appearing to the user as "Client Research" and "Delivery Management"

This task is

- Relatively small

- A typical “cosmetics” task that is not super urgent and might get deprioritised again and again

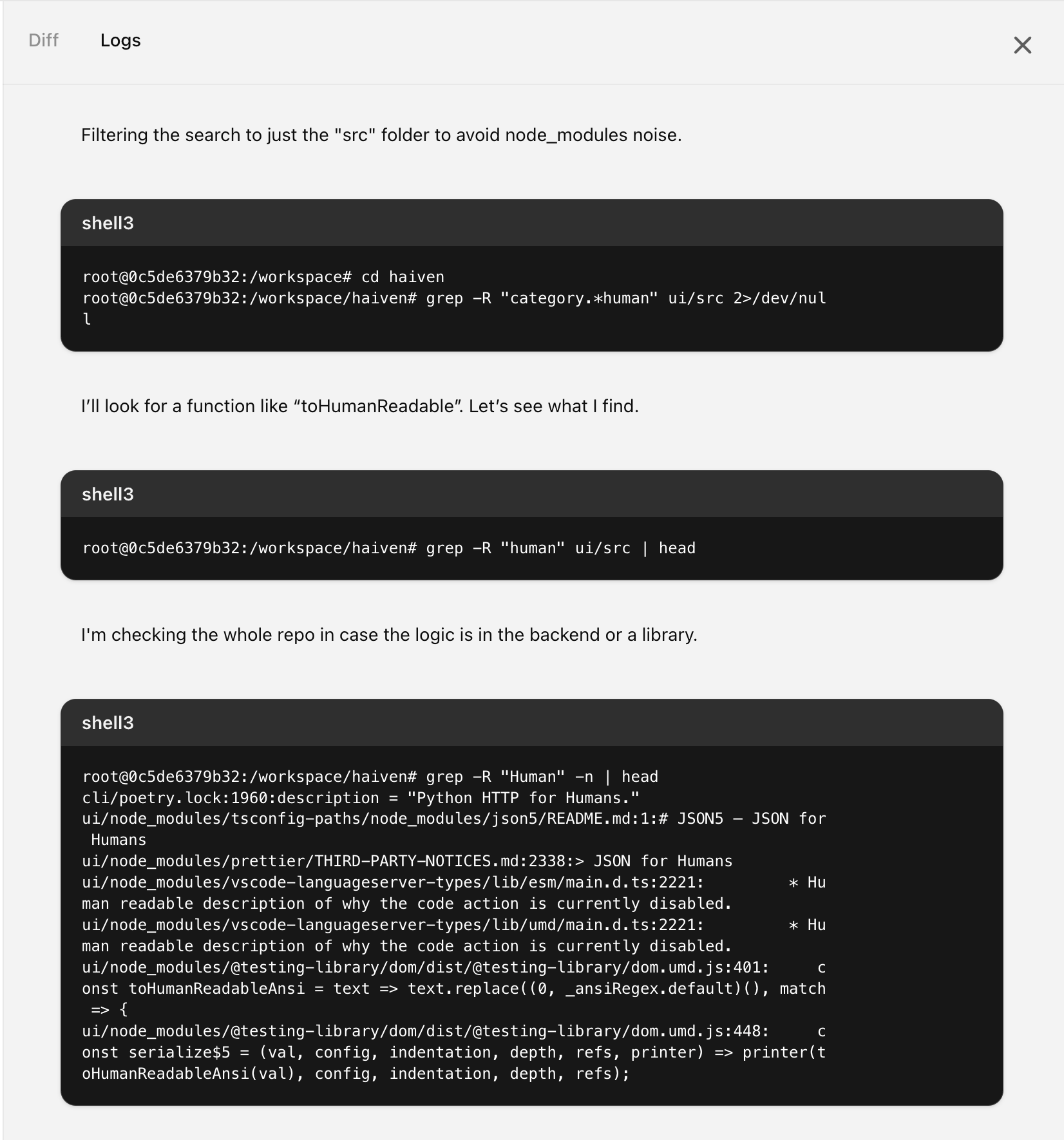

Agent logs

I’m sharing the detailed logs here so that you can get an impression of what is going on under the hood.

Here’s what the logs view looks like in Codex:

Collecting information first

The first 17 log entries were all about reading AGENTS.md and README.md:

- “I need to check the AGENTS.md in the repository root for instructions.”

- looks for AGENTS.md, but I had actually called it AGENT.md, which led to some back and forth until it figured that out

- reads README.md

- Looks at .pre-commit, finds a test setup

The following tables list the rest of the log messages verbatim. After each of those messages, the agent executed a command, for some of them I added that actual command for context. I’m sure that the log messages are just summaries of the tokens that were actually going on behind the scenes, because the messages are way too short to represent the full “thinking” the model must have been going through.

Searching for the relevant code with lots of grep

| Message | Command (samples) | Notes |

|---|---|---|

| I need to search for “category-to-human-readable” to locate where to make changes for the frontend labels. | grep -R "human readable" -n |

|

| I’ll look for any mentions of “category” in the project. | ||

| Grep output is massive because of node_modules; I’ll limit it to src instead. | ||

| I’ll search for “to human” in the src directory to find relevant references. | ||

| I’ll open and check index.js to see what’s inside. | ||

| I’m checking if the category-to-human-readable transformation is handled in _navigation_items or elsewhere. | ||

| I’m going to look up “human readable” to get more info on the topic. | grep -R "human-readable" -n ui/src \| head |

|

| I wonder if there’s a function for converting categories into something more readable. | ||

| Filtering the search to just the “src” folder to avoid node_modules noise. | second time it’s running into this, didn’t ‘remember’ | |

| I’ll look for a function like “toHumanReadable”. Let’s see what I find. | ||

| I’m checking the whole repo in case the logic is in the backend or a library. | grep -R "Human" -n \| head |

|

| I’ll limit the scope to app/ and ui/src to avoid the large node_modules folder. | third time it’s running into this, didn’t ‘remember’ | |

| I think the logic might be missing, so I’ll check _navigation_items and other modules for categories. | grep -R "human" app -n \| head |

|

| I think it’s time to look a bit deeper. Let’s dive in and explore. | grep -R "human" app \| wc -l |

To “look deeper”, it searches for “human” again, but pipes it into wc instead of head? |

Finally, it’s getting closer! The labels were previously crudely capitalized via CSS, it finds that

| Message | Command (samples) | Notes |

|---|---|---|

| I’m checking for references to “capitalize” or category transformation in the frontend. | Output: |

|

| I’ll check around the Tag section in index.js. | ||

| Tag display capitalizes words incorrectly due to hyphen or camelCase; I’ll look at filter categories. |

Now it’s starting to check for more relevant code in the app folder, where the backend is. It doesn’t find anything, which is correct, there’s nothing relevant there

| Message | Command (samples) | Notes |

|---|---|---|

| I’ll check the repo for how categories are formatted, focusing on hyphens and camelCase. | grep -R "categories" -n app \| head |

|

| Time to try a new search! | grep -R "client" -n app \| head |

|

| I’ll take a closer look at the prompts in the test data. | grep -R "categories:" -n app/tests \| head |

Why? |

| It looks like the test data has limited categories. I’ll check the docs for any differences. | grep -R "client-research" -n |

|

| I’ll start by opening the files to take a closer look. | sed -n '1,100p' ui/src/app/_navigation_items.js |

Now it’s going back to searching in the ui folder – it found the CSS previously, and is now looking for existing code that it can reuse to solve the task. That’s great, because we indeed already have a similar function, and it finds it:

| Message | Command (samples) | Notes |

|---|---|---|

| I need a function to convert tags like “client-research” to “Client Research” for filters. | grep -R "to.*Readable" -n ui/src \| head |

|

| I’m opening the toReadableText function inside _dynamic_data_renderer.js to explore it. | ||

| I’m moving through the page to get the details I need. |

It now finds a gap in the existing function’s implementation:

| Message | Command (samples) | Notes |

|---|---|---|

| I see that hyphens aren’t handled by the function. I need to adjust it for hyphens. | ||

| I’ll modify toReadableText to handle hyphens, ensuring proper capitalization and spacing. Let’s check tests! | It actually didn’t check any tests | |

| I’ll check _dynamic_data_renderer.js to examine toReadableText and modify it for hyphen-case. | ||

| I’ll check tests/_sidebar.test.js to review the related categories there. | ||

| I’ll check other files to see if toReadableText appears anywhere. | ||

| I’ll check if _sidebar or others contain a toReadableText function. |

Update the test for the function that it wants to change:

| Message | Command (samples) | Notes |

|---|---|---|

| I’ll adjust the function to handle hyphen-case, then update tests to reflect that. | ||

| I’ll check the test file after line 36 to ensure hyphen-case is covered. | ||

| I’ll scroll through lines 60 to 120 to examine the rest of the file. | ||

| I’m scrolling the rest to capture all the relevant information. | ||

| I’ll add tests for hyphen-case and update toReadableText, including modifying index.js for categories. | ||

| I’ll adjust _dynamic_data_renderer.js and index.js to use toReadableText and update tests. | git status --short |

You can configure some environment startup commands in Codex, I had configured that to be yarn install, which led to the following:

| Message | Command (samples) | Notes |

|---|---|---|

| There are untracked .yarn/ and .yarnrc files. I’ll check and clean them up. | ||

| I’ll check the root of ‘ui’ and remove any unnecessary Yarn 4 entries. | ||

| I’ll look at the commit history to see if those .yarn files were added later. | git log --oneline -n 1 |

|

| I’ll review the repository’s .gitignore to confirm if Node modules are excluded. | grep yarn .gitignore |

|

| I see no entry for .yarn or .yarnrc. I’ll check and remove them if needed. | cat ui/.gitignore |

|

| I’ll check if I can remove .yarn or .yarnrc files since they shouldn’t be committed. | ||

| It looks like yarn.lock changes were made. Time to reset to HEAD. |

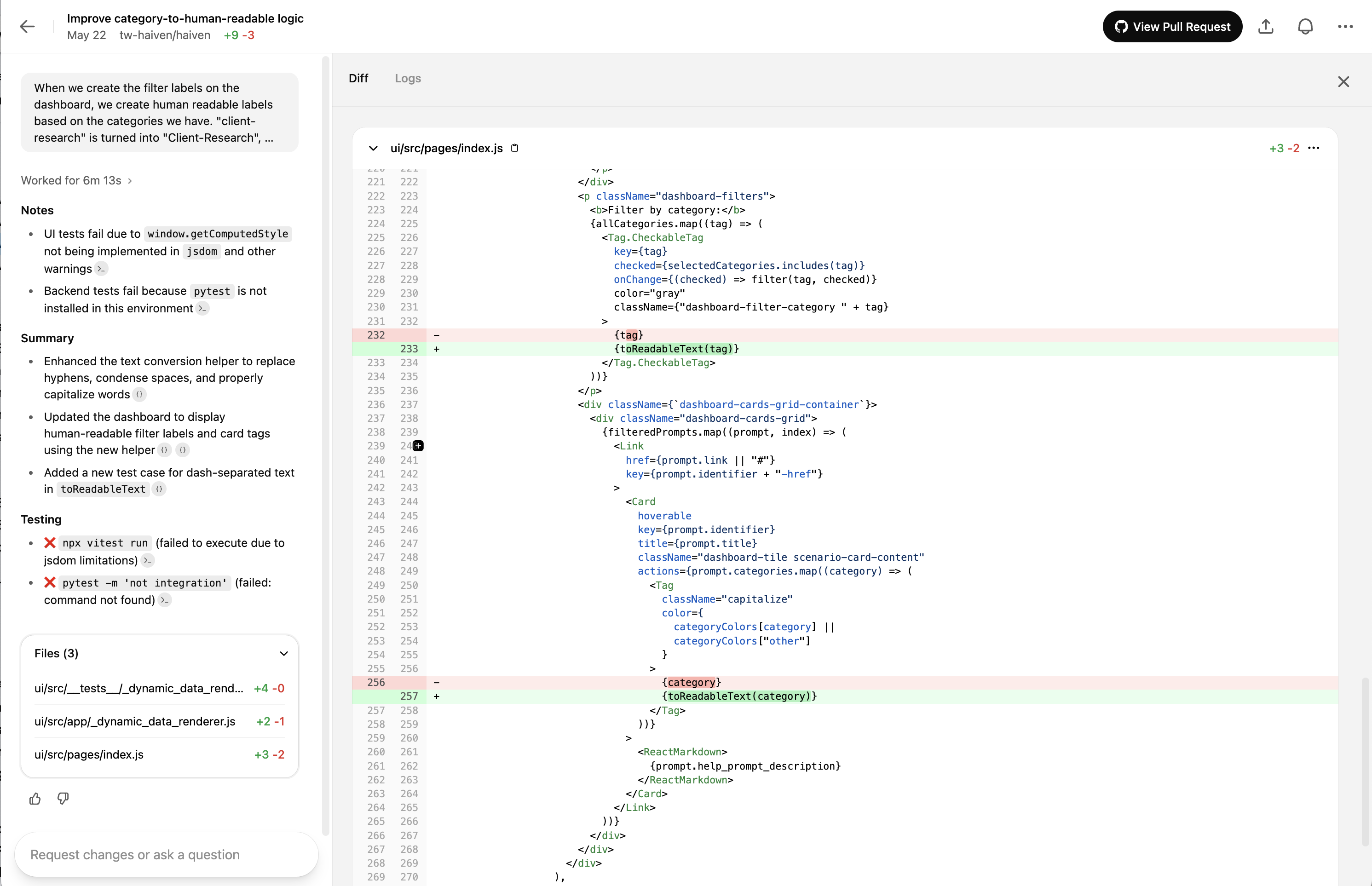

Actual code changes:

| Message | Command (samples) | Notes |

|---|---|---|

| Time to modify the code. I’ll begin the necessary updates now. | sed -n '1,30p' ... |

|

| I’m going to add a hyphen to the necessary part of the code. | ||

| I’m going to make changes to the index.js file now. | ||

| I’ll import toReadableText and start using it in the code. | ||

| I’ll update index.js to import toReadableText and adjust the _dynamic_data_renderer tests. | ||

| I need to replace {tag} with {toReadableText(tag)} for better rendering. | ||

| I’ll take a look at the differences to better understand the changes. | git diff |

|

| I’ll review the changes in index.js to see what’s been modified. | ||

| I’ll add a new test for hyphenated cases in _dynamic_data_renderer.test.js. | ||

| I’ll consider inserting a test after line 35. |

Running our code linter next, nice:

| Message | Command (samples) | Notes |

|---|---|---|

| I’ll format the code using Prettier as instructed. | cd ui && npx prettier -w ... |

Prettier is mentioned in the AGENTS.md. It seems to run prettier successfully, though none of the files were changed |

| I’ll review the logs to gather more information. |

Trying to run the tests – unsuccessfully:

| Message | Command (samples) | Notes |

|---|---|---|

| I’ll run the yarn tests to check if everything’s working fine. | ||

| Tests might’ve been cached or need network—output’s empty, so I’ll check the log. | ||

| The lockfile isn’t updated. I’ll try running ‘yarn install’ since the environment allows it. | ||

| The environment is offline, so yarn install can’t be run, but I see a node_modules folder. | ||

| I see node_modules are already installed. I’ll check the log for why tests failed. | ||

| The error happens because Yarn 4 relies on .yarn and .yarnrc, which we removed. | ? | |

| It looks like .yarnrc.yml isn’t tracked. I’ll also check .yarn for tracking. | ||

| I’m double-checking the package.json to see if “haiven-ui” is the correct name. | ? | |

| I’ll check the Node version to confirm if it’s 18 or 20 by running node -v. | weird, the node version specified in package.json is 22.6 | |

| I think switching to npx vitest run could fix the error. Let’s try that. | ||

| The output is long—now I’ll capture the last summary lines. | Error: Not implemented: window.getComputedStyle(elt, pseudoElt) |

|

| The output is too long, so I’ll extract the key points for a summary. |

Going on a tangent about setting up the venv, which is totally unnecessary because it only plans changes for frontend code:

| Message | Command (samples) | Notes |

|---|---|---|

| The tests failed due to case differences, but now I’ll activate the virtual environment. | AGENTS.md contains instructions to activate venv for the Python backend, but we’re in the frontend here, so… | |

| Hmm, the .venv isn’t here. I should check if it needs creation or already exists. | ||

| It looks like the .venv directory is missing. Let’s review AGENT’s instructions carefully. | ||

| The environment seems offline, but there might be a script to set up dependencies locally. | ||

| I can’t run “poetry install” offline, but I’ll check the .cursor folder for dependencies. | ||

| I can’t run backend tests with the current setup, but I’ll try pytest anyway. |

Gave up on the tests, now wrapping up the changes:

| Message | Command (samples) | Notes |

|---|---|---|

| Tests failed due to missing dependencies. Now, I’ll check status and proceed with committing. | ||

| I think the .yarnrc.yml was left over from a previous yarn install. Removing it. | ||

| I’ll grab the relevant file lines for the citations now. | ||

| I’ll get the surrounding line range for the changes in index.js. | nl -ba ui/src/pages/index.js \| sed -n '12,28p' |

|

| I’ll grab the relevant lines around those changes now. | ||

| It’s time to finalize the PR message and verify the commit. | ||

| UI tests failed due to environment issues. I’ll reference the last failure lines. Done! |

Here is the full pull request created by Codex.

Observations

Brute text search

I found it interesting to see that Codex, and most of the other coding assistants I’m using, are resorting more and more to simple text search to find relevant code. In the log above you see how Codex goes through a bunch of search terms with grep, to find relevant pieces of code (“human”, “human readable”, “humanReadable”, …). The reason why I find it interesting is because there have been a lot of seemingly more sophisticated code search mechanisms implemented, like semantic search over codebase indices with vectors / embeddings (Cursor, GH Copilot, Windsurf), or using the abstract syntax tree as a starting point (Aider, Cline). The latter is still quite simple, but doing text search with grep is the simplest possible.

It seems like the tool creators have found that this simple search is still the most effective after all – ? Or they’re making some kind of trade-off here, between simplicity and effectiveness?

The remote dev environment is key for these agents to work “in the background”

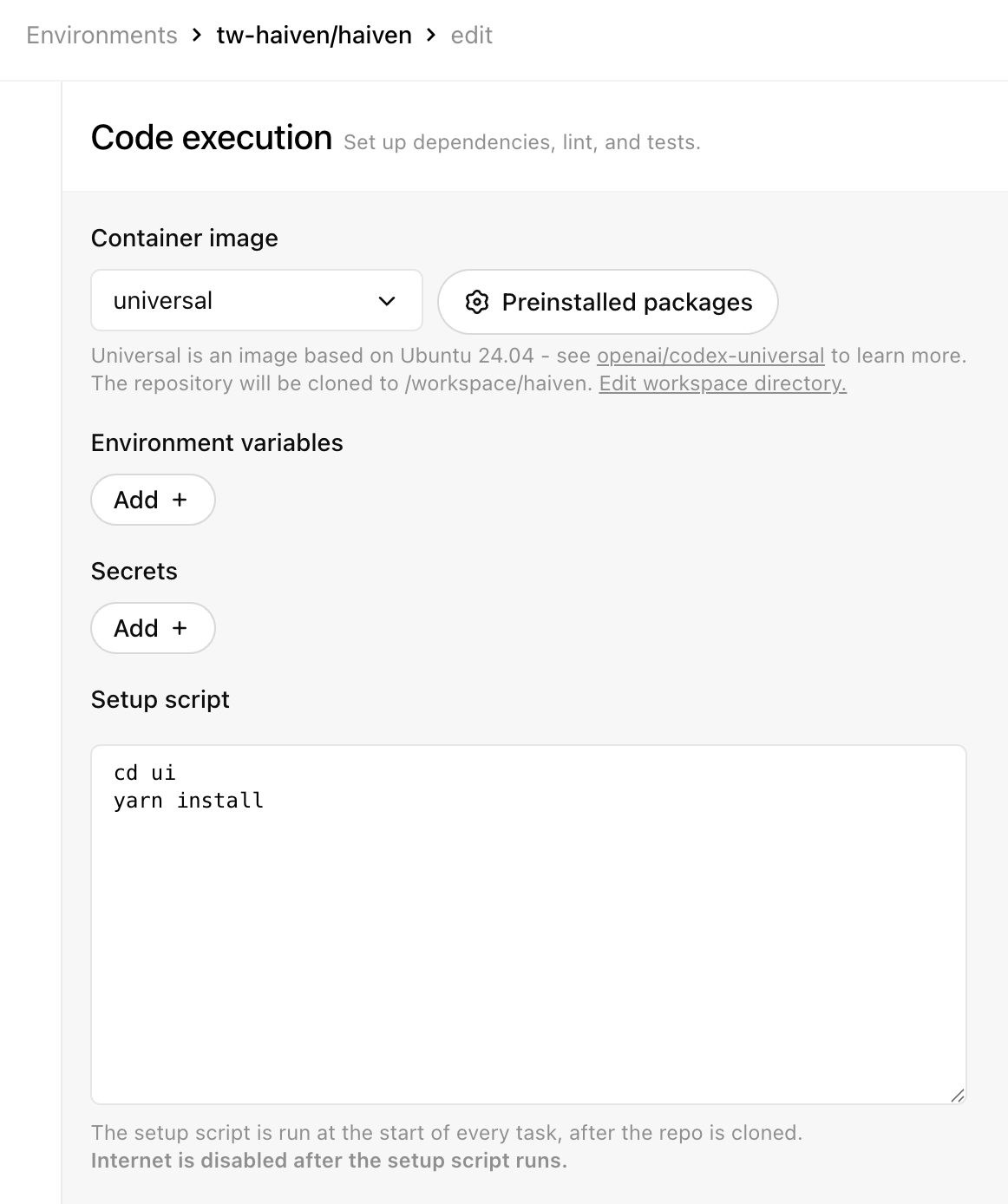

Here is a screenshot of Codex’s environment configuration screen (as of end of May 2025). As of now, you can configure a container image, environment variables, secrets, and a startup script. They point out that after the execution of that startup script, the environment will not have access to the internet anymore, which would sandbox the environment and mitigate some of the security risks.

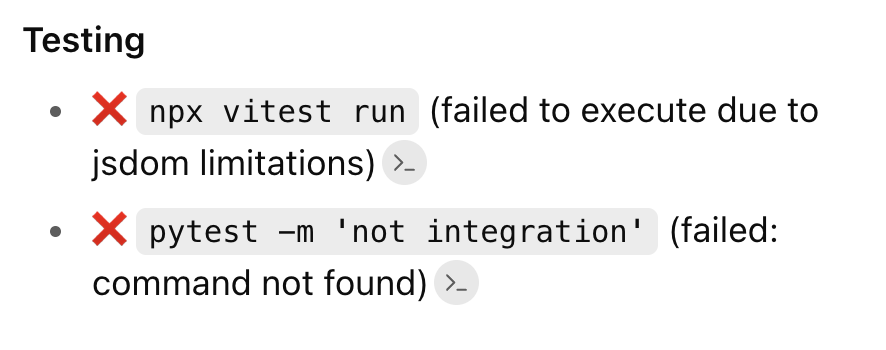

For these “autonomous background agents”, the maturity of the remote dev environment that is set up for the agent is crucial, and it’s a tricky challenge. In this case e.g., Codex didn’t manage to run the tests.

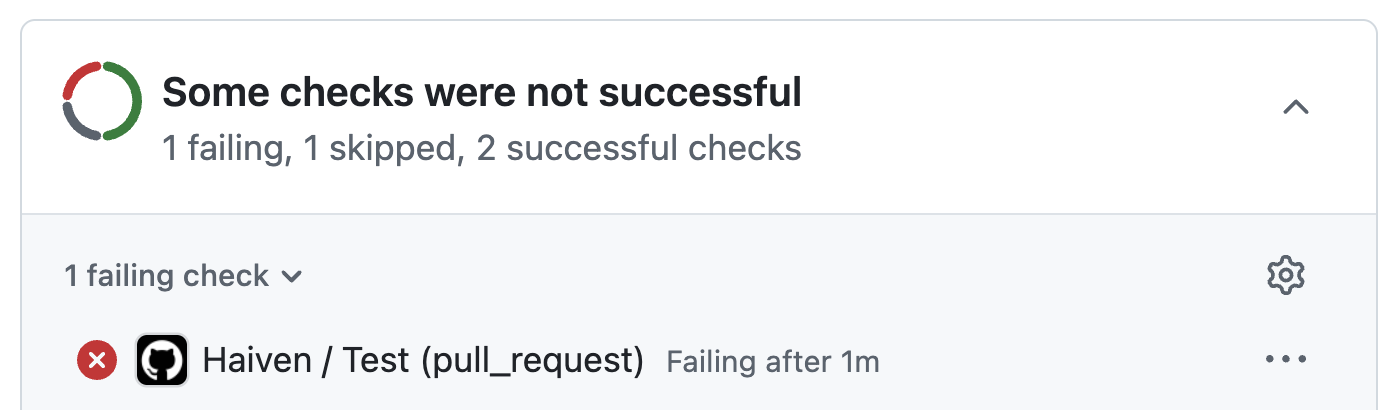

And it turned out that when the pull request was created, there were indeed two tests failing because of regression, which is a shame, because if it had known, it would have easily been able to fix the tests, it was a trivial fix:

This particular project, Haiven, actually has a scripted developer safety net, in the form of a quite elaborate .pre-commit configuration. () It would be ideal if the agent could execute the full pre-commit before even creating a pull request. However, to run all the steps, it would need to run

- Node and yarn (to run UI tests and the frontend linter)

- Python and poetry (to run backend tests)

- Semgrep (for security-related static code analysis)

- Ruff (Python linter)

- Gitleaks (secret scanner)

…and all of those have to be available in the right versions as well, of course.

Figuring out a smooth experience to spin up just the right environment for an agent is key for these agent products, if you want to really run them “in the background” instead of a developer machine. It is not a new problem, and to an extent a solved problem, after all we do this in CI pipelines all the time. But it’s also not trivial, and at the moment my impression is that environment maturity is still an issue in most of these products, and the user experience to configure and test the environment setups is as frustrating, if not more, as it can be for CI pipelines.

Solution quality

I ran the same prompt 3 times in OpenAI Codex, 1 time in Google’s Jules, 2 times locally in Claude Code (which is not fully autonomous though, I needed to manually say ‘yes’ to everything). Even though this was a relatively simple task and solution, turns out there were quality differences between the results.

Good news first, the agents came up with a working solution every time (leaving breaking regression tests aside, and to be honest I didn’t actually run every single one of the solutions to confirm). I think this task is a good example of the types and sizes of tasks that GenAI agents are already well positioned to work on by themselves. But there were two aspects that differed in terms of quality of the solution:

- Discovery of existing code that could be reused: In the log here you’ll find that Codex found an existing component, the “dynamic data renderer”, that already had functionality for turning technical keys into human readable versions. In the 6 runs I did, only 2 times did the respective agent find this piece of code. In the other 4, the agents created a new file with a new function, which led to duplicated code.

- Discovery of an additional place that should use this logic: The team is currently working on a new feature that also displays category names to the user, in a dropdown. In one of the 6 runs, the agent actually discovered that and suggested to also change that place to use the new functionality.

| Found the reusable code | Went the extra mile and found the additional place where it should be used |

|---|---|

| Yes | Yes |

| Yes | No |

| No | Yes |

| No | No |

| No | No |

| No | No |

I put these results into a table to illustrate that in each task given to an agent, we have multiple dimensions of quality, of things that we want to “go right”. Each agent run can “go wrong” in one or multiple of these dimensions, and the more dimensions there are, the less likely it is that an agent will get everything done the way we want it.

Sunk cost fallacy

I’ve been wondering – let’s say a team uses background agents for this type of task, the types of tasks that are kind of small, and neither important nor urgent. Haiven is an internal-facing application, and has only two developers assigned at the moment, so this type of cosmetic fix is actually considered low priority as it takes developer capacity away from more important things. When an agent only kind of succeeds, but not fully – in which situations would a team discard the pull request, and in which situations would they invest the time to get it the last 20% there, even though spending capacity on this had been deprioritised? It makes me wonder about the tail end of unprioritised effort we might end up with.