Marketers have long dreamed of one-on-one customer engagement, but crafting the volume of messages required for personalized engagement at that level has been a major challenge. While many organizations aim for more personalized marketing, they often target large groups of thousands or millions of customers within which a large amount of diversity still exists. Although this is better than a generic, one-size-fits-all approach, organizations would prefer to be more precise, if only they had the bandwidth to engage at a more granular level.

As mentioned in our previous blog, generative AI can help ease the challenge of creating highly tailored marketing content. While achieving true one-on-one engagement may still be difficult due to some of the limitations of the technology in its current state, combining customer details with sample content and smart prompt engineering can be used to cost-effectively create a manageable volume of tailored variants. Applying independent models to evaluate the generated content before it then heads to a final review with a knowledgeable marketer can go a long way to ensuring this finer-grained content meets organizational standards while being more precisely aligned with the needs and preferences of a specific subsegment.

But how do we turn this into a reliable workflow? And critically, how do we actually get all these content variants to the intended customers using our existing marketing technologies? In this post, we continue to build on the holiday gift guide scenario introduced in the prior blog and demonstrate an end-to-end workflow for email-based content delivery with Amperity and Braze, two widely adopted platforms in the enterprise MarTech stack.

Generating the Content

In our previous blog, we worked through how to craft a prompt capable of triggering a generative AI model to create a marketing email message tailored to the interests of an audience subsegment. The prompt employed a sample email message to serve as a guide and then tasked the model with altering the content to resonate better with an audience with specific price sensitivities and activity preferences (Figure 1).

Figure 1. The prompt developed for the creation of a personalized holiday gift guide

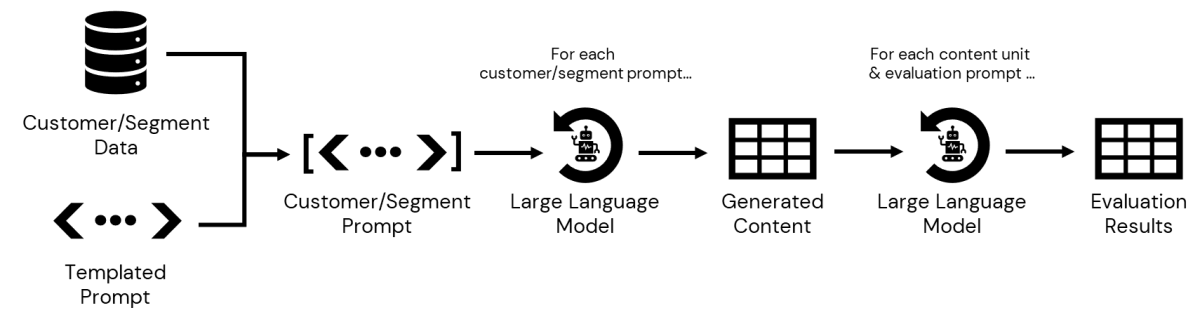

To apply this prompt at scale, we need to replace the customer-specific elements (product subcategory and price preferences, in this example) with placeholders, creating a prompt template. The template, housed in the Databricks environment, can then be populated with the customer details housed in the customer data platform (CDP).
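As a rough illustration of the templating idea, the sketch below fills a prompt template with the details of one subsegment. The template wording, the placeholder names, and the `build_prompt` helper are assumptions made for this example; they are not the prompt shown in Figure 1.

```python
from string import Template

# Hypothetical prompt template. The wording and placeholder names are
# illustrative only, not the actual prompt from Figure 1.
PROMPT_TEMPLATE = Template(
    "Rewrite the sample holiday gift guide email below for customers who "
    "prefer ${price_point}-priced products and are most interested in "
    "${subcategory}.\n\nSample email:\n${sample_email}"
)


def build_prompt(price_point: str, subcategory: str, sample_email: str) -> str:
    """Fill the template with the details of a single subsegment."""
    return PROMPT_TEMPLATE.substitute(
        price_point=price_point,
        subcategory=subcategory,
        sample_email=sample_email,
    )


prompt = build_prompt("high", "Hiking", "Subject: Our Holiday Gift Guide ...")
print(prompt)
```

Because `substitute` raises a `KeyError` when a placeholder is left unfilled, a subsegment with missing details fails loudly rather than producing a half-completed prompt.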

As we are using Amperity as our demonstration CDP, integration is a fairly straightforward process. Using the Amperity Bridge capability, built on the open-source Delta Sharing protocol supported by the Databricks environment, we simply create a connection between the two platforms and share the appropriate information across it (Figure 2). (The detailed steps on setting up the bridge connection are found here.)

Figure 2. A video walkthrough of how to connect to Databricks via the Amperity Bridge

Our next step is to query the CDP data, now accessible within Databricks, to gather the details for each subsegment. With the subsegments defined, we pass the information associated with each one into our prompt to generate customized messages. After the generated messages are persisted, we iterate over them, evaluating each against various criteria before the content and the evaluation results are presented to a marketer for final review and approval (Figure 3).

The end result of this process is a table of content variants, one for each combination of preferred price point and product subcategory, along with a table of evaluation outputs for each evaluation step. The data is now ready for marketer review.
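The shape of this generate-then-evaluate loop can be sketched as follows. This is a minimal illustration, not the notebook's implementation: the subsegment values are made up, and `generate_message` and `evaluate_message` are hypothetical stand-ins for the model call and the independent evaluation steps.

```python
from itertools import product

# Hypothetical subsegment values. In practice these would come from a query
# against the CDP data shared into Databricks.
price_points = ["low", "medium", "high"]
subcategories = ["Hiking", "Camping"]


def generate_message(price_point: str, subcategory: str) -> dict:
    """Stand-in for the generative model call; returns placeholder copy."""
    return {
        "title": f"Holiday gifts for {subcategory} fans",
        "body": f"Placeholder copy for {price_point}-priced {subcategory} gifts.",
    }


def evaluate_message(message: dict) -> dict:
    """Stand-in for the independent evaluation; two trivial checks only."""
    return {
        "has_title": bool(message["title"].strip()),
        "body_under_2000_chars": len(message["body"]) < 2000,
    }


variants = []     # one record per price point / subcategory combination
evaluations = []  # evaluation results keyed by the same subsegment details

for price_point, subcategory in product(price_points, subcategories):
    key = {
        "preferred_price_point": price_point,
        "holiday_preferred_subcategory": subcategory,
    }
    message = generate_message(price_point, subcategory)
    variants.append({**key, **message})
    evaluations.append({**key, **evaluate_message(message)})

print(len(variants), "variants generated")
```

Keeping the evaluation results keyed by the same subsegment details as the variants makes it easy to present both side by side to the reviewing marketer.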

NOTE For a detailed, technical implementation of the workflow in Figure 3, please check out this notebook.

Delivering the Content

With our content variants created, we can turn our attention to delivery. The exact details of how to go about this step are dependent upon the specific delivery platform you are using. For our demonstration, we will take a look at how this content can be delivered using Braze, a leading content delivery platform widely adopted across marketing organizations.

At a high level, the steps involved with delivering this content via Braze are as follows:

- Push content variants to Braze

- Identify the audience members to receive the content

- Connect the audience members with specific content variants

Push Content Variants to Braze

Within Braze, the content employed as part of a campaign is defined as a Braze Catalog. Using Braze Cloud Data Ingestion, this content can be read from Databricks so long as it is presented within a table or view containing a unique identifier (ID), a datetime field indicating when the content was last updated (UPDATED_AT), and a JSON payload (PAYLOAD) with title and body elements that will be used to construct the delivered content.

To illustrate how we might construct this dataset, let’s assume the output of our content generation workflow (as illustrated in Figure 4) resulted in a content table with the following structure, where preferred_price_point and holiday_preferred_subcategory represent the subsegment details unique to each record in the table:

We might define a view against this table to structure it for deployment as a Braze Catalog as follows:
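The reshaping that such a view performs can be sketched in Python. This is an illustration under stated assumptions rather than the actual view definition: the `email_subject` and `email_body` column names are hypothetical, and building the ID by joining the two subsegment fields with an underscore is assumed from the way the catalog row is later looked up.

```python
import json
from datetime import datetime, timezone

# Hypothetical content rows. Only preferred_price_point and
# holiday_preferred_subcategory are named in the text; the other two column
# names are assumptions made for this sketch.
content_rows = [
    {
        "preferred_price_point": "high",
        "holiday_preferred_subcategory": "Hiking",
        "email_subject": "Premium picks for the trail",
        "email_body": "Placeholder body copy for the hiking gift guide.",
    },
]


def to_catalog_row(row: dict, updated_at: datetime) -> dict:
    """Reshape one content row into the ID / UPDATED_AT / PAYLOAD layout."""
    return {
        # Assumed ID format: the two subsegment fields joined by an underscore.
        "ID": f"{row['preferred_price_point']}_{row['holiday_preferred_subcategory']}",
        "UPDATED_AT": updated_at.isoformat(),
        # The payload carries the title and body elements as a JSON string.
        "PAYLOAD": json.dumps(
            {"title": row["email_subject"], "body": row["email_body"]}
        ),
    }


now = datetime.now(timezone.utc)
catalog_rows = [to_catalog_row(row, now) for row in content_rows]
print(catalog_rows[0]["ID"])
```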

Within Braze, we can now define a catalog for this content (Figure 3).

Figure 3. The Braze Catalog intended to house our generated content

We then configure a Cloud Data Ingestion (CDI) sync that connects the Databricks view to the Braze Catalog structure and schedule it to run so that the catalog stays up to date (Figure 4).

Figure 4. The Cloud Data Ingestion (CDI) sync mapping the Braze Catalog to the Databricks content view

Identify the Audience Members

We now need the details for the individuals to whom we intend to deliver this content. As our goal is to deliver this content via email, we will need the email addresses of the targeted individuals. Elements like first and last name may also be needed so that the content can be addressed to the recipient in a more personalized manner. And we will need details on how individuals are aligned with product subcategory and price preferences. This last element will be essential to connect audience members with the specific content variations housed in the Braze Catalog.

Because we are using Amperity as our CDP, pushing this information to Braze is a simple matter of defining the pool of recipients as an audience and using the Amperity connector to push these details across (Figure 5).
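Before activating the audience, it can be worth confirming that every recipient's preferences map to a content variant that actually exists. The sketch below is one hypothetical way to run that check; the `AudienceMember` fields mirror the details listed above, while the sample records, the catalog IDs, and the underscore-joined ID format are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class AudienceMember:
    """Details assumed to be pushed to Braze for each recipient."""
    email: str
    first_name: str
    last_name: str
    preferred_price_point: str
    holiday_preferred_subcategory: str

    def variant_id(self) -> str:
        # Assumed ID format: the two preference fields joined by an underscore.
        return f"{self.preferred_price_point}_{self.holiday_preferred_subcategory}"


# Hypothetical catalog IDs and audience records.
catalog_ids = {"high_Hiking", "low_Camping"}
audience = [
    AudienceMember("ana@example.com", "Ana", "Diaz", "high", "Hiking"),
    AudienceMember("raj@example.com", "Raj", "Patel", "medium", "Cycling"),
]

# Recipients whose preferences do not correspond to any generated variant.
unmatched = [m.email for m in audience if m.variant_id() not in catalog_ids]
print(unmatched)
```

Recipients that show up in `unmatched` would need either a newly generated variant or a generic fallback message.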

Connect Audience Members with Content Variants

With all elements in place within Braze, we can now connect audience members with specific content variants and schedule delivery. This is done within Braze using Liquid templating, an open-source template language developed by Shopify and written in Ruby. This language is highly accessible to marketers and enables them to define customizable content for large-scale distribution.

Getting Started

Databricks is increasingly being used within enterprises as the core hub for data and analytics capabilities. With built-in and highly extensible generative AI capabilities as well as deep integration into a variety of complementary platforms such as the Amperity CDP and Braze content delivery platform, organizations are building a wide range of applications such as the one demonstrated in this blog with Databricks at the center.

If you’d like to learn more about how Databricks can be used to help your marketing teams create and deliver more personalized content to your customers, reach out and let’s discuss the many options available for developing solutions using the platform.

This process leverages several key components and utilizes the following workflow:

- Content Structure & Ingestion

- Amperity Audience Activation – Amperity syncs the audience of users for whom the content was created to Braze for precise targeting.

- Campaign Construction & Liquid Templating

Step 3: Campaign Construction and Liquid Templating

The final stage involves building the Braze campaign.

Liquid templating plays a pivotal role here, allowing for dynamic insertion of the generated content based on user attributes stored within Braze profiles. These attributes, synced via the Amperity activation, are referenced to create a matching Catalog row ID. This ID is then used to fetch and insert the generated subject line and body copy into the email.

3a. Email Subject Line

Using Liquid filters, we combine the `preferred_price_point` and `holiday_preferred_subcategory` attributes, separated by an underscore, to create a local `identifier` variable:

This dynamically generated `identifier` is then used to reference the corresponding ID in the HolidayGenAI catalog:

Figure 5. Screenshot of send settings with Liquid

For a user with a `preferred_price_point` of high and `holiday_preferred_subcategory` of Hiking, the resulting Liquid output in the email’s subject line will be derived from the title of the matching catalog item:

Figure 6. Catalog item showing the relevant row
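The identifier construction and catalog lookup described above are written in Liquid inside Braze, but the logic itself can be mimicked in a few lines of Python. The sketch below is only an analogue for reasoning about the behavior: the catalog contents are made up, and the fallback subject line is an added assumption rather than part of the campaign shown here.

```python
# Hypothetical stand-in for the HolidayGenAI catalog, keyed by row ID.
catalog = {
    "high_Hiking": {
        "title": "Premium picks for the trail",
        "body": "Placeholder body copy for the hiking gift guide.",
    },
}


def render_subject(profile: dict, catalog: dict,
                   fallback: str = "Our Holiday Gift Guide") -> str:
    """Mirror the templating logic: build the identifier, then fetch the title."""
    # Combine the two profile attributes, separated by an underscore.
    identifier = (
        f"{profile['preferred_price_point']}_"
        f"{profile['holiday_preferred_subcategory']}"
    )
    item = catalog.get(identifier)
    # The fallback is an assumption for this sketch; it covers profiles whose
    # identifier matches no catalog row.
    return item["title"] if item else fallback


profile = {"preferred_price_point": "high", "holiday_preferred_subcategory": "Hiking"}
print(render_subject(profile, catalog))
```

Swapping `"title"` for `"body"` gives the equivalent lookup for the body copy.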

3b. Email Body Copy

We can follow the same approach for pulling the generated content into the body of the email.

The final result is an email that dynamically pulls in the generated content, personalized to each user’s preferred price point and subcategory, with the aim of driving better engagement and higher conversion rates.

Figure 7. Email screenshot

This use case could be expanded further to include AI-generated images or even to use Connected Content to query a Databricks endpoint directly at send time.
