Since 2015, Microsoft has recognized the very real reputational, emotional, and other devastating impacts that arise when intimate imagery of a person is shared online without their consent. However, this challenge has only become more serious and more complex over time, as technology has enabled the creation of increasingly realistic synthetic or “deepfake” imagery, including video.

The advent of generative AI has the potential to supercharge this harm – and there has already been a surge in abusive AI-generated content. Intimate image abuse overwhelmingly affects women and girls and is used to shame, harass, and extort not only political candidates or other women with a public profile, but also private individuals, including teens. Whether real or synthetic, the release of such imagery has serious, real-world consequences: both from the initial release and from the ongoing distribution across the online ecosystem. Our collective approach to this whole-of-society challenge therefore must be dynamic.

On July 30, Microsoft released a policy whitepaper outlining a set of suggestions for policymakers to help protect Americans from abusive AI deepfakes, with a focus on protecting women and children from online exploitation. Advocating for modernized laws to protect victims is one element of our comprehensive approach to address abusive AI-generated content. Today we also provide an update on Microsoft’s global efforts to safeguard its services and individuals from non-consensual intimate imagery (NCII).

Announcing our partnership with StopNCII

We have heard concerns from victims, experts, and other stakeholders that user reporting alone may not scale effectively for impact or adequately address the risk that imagery can be accessed via search. As a result, today we are announcing that we are partnering with StopNCII to pilot a victim-centered approach to detection in Bing, our search engine.

StopNCII is a platform run by SWGfL that enables adults from around the world to protect themselves from having their intimate images shared online without their consent. StopNCII enables victims to create a “hash” or digital fingerprint of their images (including synthetic imagery), without those images ever leaving their device. Those hashes can then be used by a wide range of industry partners to detect that imagery on their services and take action in line with their policies. In March 2024, Microsoft donated a new PhotoDNA capability to support StopNCII’s efforts. We have been piloting use of the StopNCII database to prevent this content from being returned in image search results in Bing. We have taken action on 268,899 images up to the end of August. We will continue to evaluate efforts to expand this partnership. We encourage adults concerned about the release – or potential release – of their images to report to StopNCII.
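
To make the hash-matching idea concrete, here is a minimal sketch of the general pattern: a fingerprint is computed on the person's own device, only the fingerprint is shared, and a participating service compares fingerprints of the images it indexes against the shared set. This is an illustration under stated assumptions, not StopNCII's or Bing's implementation. The function names (`fingerprint`, `filter_results`) and the sample data are hypothetical, and SHA-256 is used only as a stand-in: it matches exact byte-for-byte copies, whereas image-matching technologies such as PhotoDNA are designed to also recognize altered copies.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a hex digest computed locally; only this string would be shared.

    Assumption: SHA-256 stands in for the real image-hashing technology.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def filter_results(candidates: dict[str, bytes], blocked_hashes: set[str]) -> list[str]:
    """Return names of candidate images whose fingerprint is not in the shared set."""
    return [
        name
        for name, data in candidates.items()
        if fingerprint(data) not in blocked_hashes
    ]


# On the person's device: hash the image. The image bytes are never uploaded.
private_image = b"example image bytes"  # hypothetical placeholder data
shared_hashes = {fingerprint(private_image)}

# On a participating service: compare indexed images against the shared hashes.
indexed = {
    "a.jpg": b"example image bytes",    # same bytes as the hashed image
    "b.jpg": b"unrelated image bytes",  # different content
}
visible = filter_results(indexed, shared_hashes)
```

In this sketch, `a.jpg` is withheld because its fingerprint matches a shared hash, while `b.jpg` remains in `visible`. The point of the design is that the service never needs the original image to recognize a match.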

Our approach to addressing non-consensual intimate imagery

At Microsoft, we recognize that we have a responsibility to protect our users from illegal and harmful online content while respecting fundamental rights. We strive to achieve this across our diverse services by taking a risk-proportionate approach: tailoring our safety measures to the risk and to the unique service. Across our consumer services, Microsoft does not allow the sharing or creation of sexually intimate images of someone without their permission. This includes photorealistic NCII content that was created or altered using technology. We do not allow NCII to be distributed on our services, nor do we allow any content that praises, supports, or requests NCII.

Additionally, Microsoft does not allow any threats to share or publish NCII, also called intimate extortion. This includes asking for money, images, or other valuable things, or threatening a person to obtain them, in exchange for not making the NCII public. In addition to this comprehensive policy, we have tailored prohibitions in place where relevant, such as for the Microsoft Store. The Code of Conduct for Microsoft Generative AI Services also prohibits the creation of sexually explicit content.

Reporting content directly to Microsoft

We will continue to remove content reported directly to us on a global basis, as well as where violative content is flagged to us by NGOs and other partners. In search, we also continue to take a range of measures to demote low-quality content and to elevate authoritative sources, while considering how we can further evolve our approach in response to expert and external feedback.

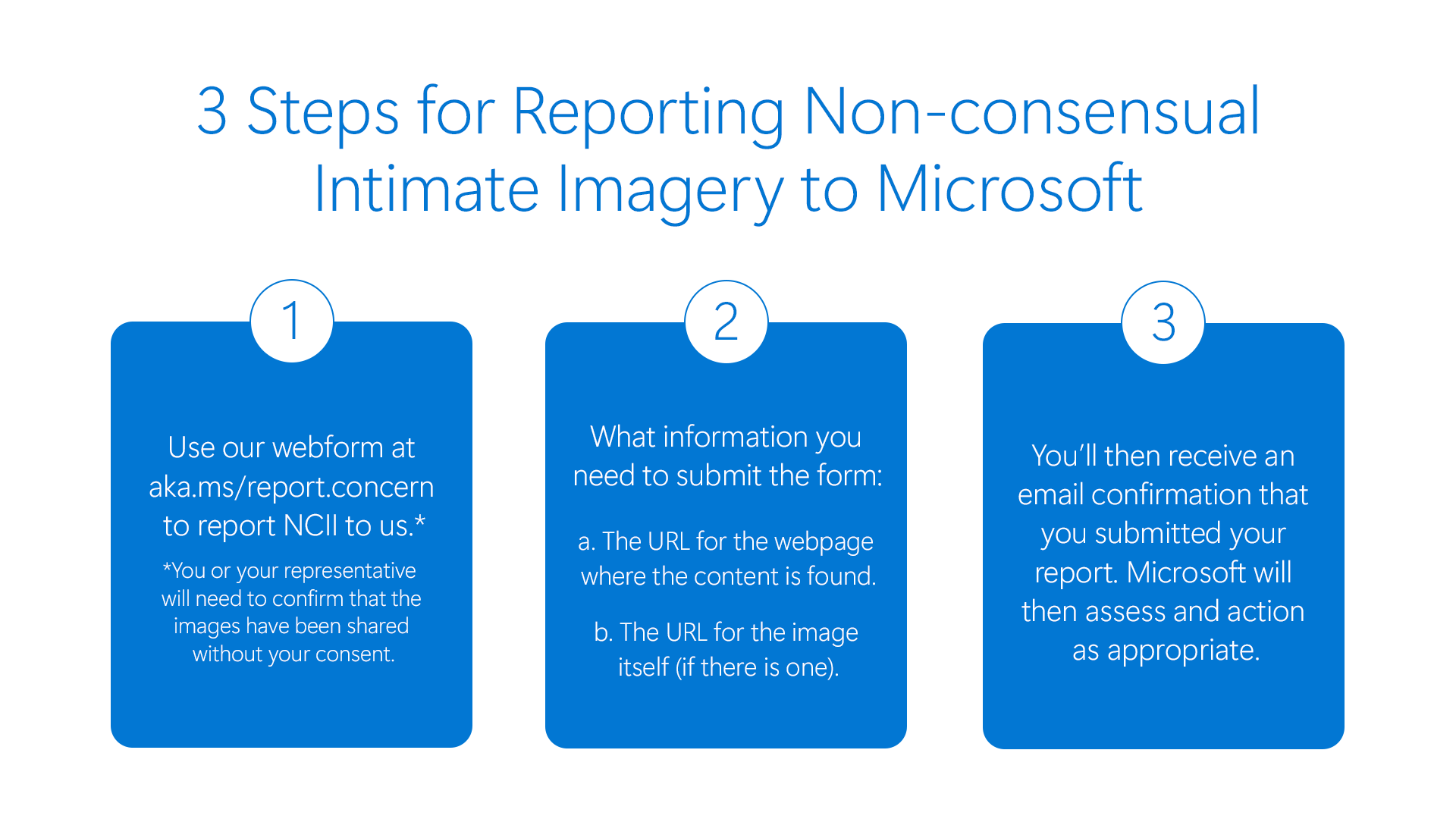

Anyone can request the removal of a nude or sexually explicit image or video of themselves which has been shared without their consent through Microsoft’s centralized reporting portal.

* NCII reporting is for users 18 years and over. For those under 18, please report as child sexual exploitation and abuse imagery.

Once that content has been reviewed by Microsoft and confirmed as violating our NCII policy, we remove reported links to photos and videos from search results in Bing globally and/or remove access to the content itself if it has been shared on one of Microsoft’s hosted consumer services. This approach applies to both real and synthetic imagery. Some services (such as gaming and Bing) also provide in-product reporting options. Finally, we provide transparency on our approach through our Digital Safety Content Report.

Continuing whole-of-society collaboration to meet the challenge

As we have seen illustrated vividly through recent, tragic stories, synthetic intimate image abuse also affects children and teens. In April, we outlined our commitment to new safety by design principles, led by NGOs Thorn and All Tech is Human, intended to reduce child sexual exploitation and abuse (CSEA) risks across the development, deployment, and maintenance of our AI services. As with synthetic NCII, we will take steps to address any apparent CSEA content on our services, including by reporting to the National Center for Missing and Exploited Children (NCMEC). Young people who are concerned about the release of their intimate imagery can also report to NCMEC’s Take It Down service.

Today’s update is but a point in time: these harms will continue to evolve and so too must our approach. We remain committed to working with leaders and experts worldwide on this challenge and to hearing perspectives directly from victims and survivors. Microsoft has joined a new multistakeholder working group, chaired by the Center for Democracy & Technology, Cyber Civil Rights Initiative, and National Network to End Domestic Violence, and we look forward to collaborating through this and other forums on evolving best practices. We also commend the focus on this harm through the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and look forward to continuing to work with the U.S. Department of Commerce’s National Institute of Standards and Technology and the AI Safety Institute on best practices to reduce risks of synthetic NCII, including as a problem distinct from synthetic CSEA. We will also continue to advocate for policy and legislative changes to deter bad actors and ensure justice for victims, while raising awareness of the impact on women and girls.