The speed and scalability of the data behind an application, along with its cost, are critical concerns for every development team. This blog post describes how we optimized Rockset’s hot storage tier to improve efficiency by more than 200%. We delve into how we architect for efficiency by leveraging new hardware, maximizing the use of available storage, implementing better orchestration techniques and using snapshots for data durability. With these efficiency gains, we were able to reduce costs while keeping the same performance and pass along the savings to users. Rockset’s new tiered pricing is as low as $0.13/GB-month, making real-time data more affordable than ever before.

Rockset’s hot storage layer

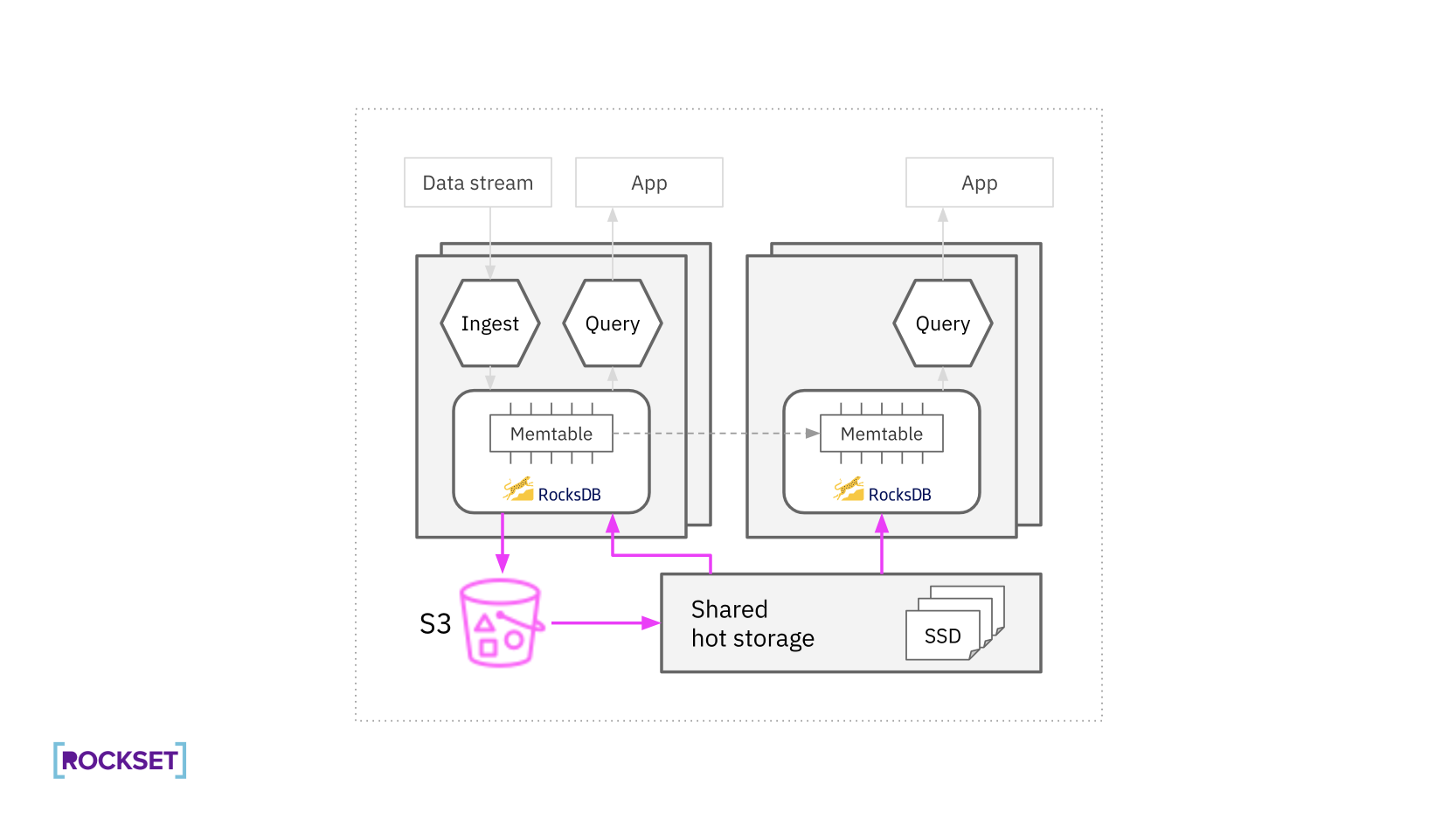

Rockset’s storage solution is an SSD-based cache layered on top of Amazon S3, designed to deliver consistent low-latency query responses. This setup effectively bypasses the latency traditionally associated with retrieving data directly from object storage and eliminates any fetching costs.
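As a rough illustration of the idea, the following minimal sketch shows a read-through cache sitting in front of an object store. It is not Rockset's implementation: the `ReadThroughCache` class, its method names, and the dictionary standing in for S3 are all assumptions made for this example.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """Hypothetical hot tier: serve blocks from a fast local store and
    fall back to the (slower) object store only on a miss."""

    def __init__(self, fetch_from_object_store: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_object_store
        self._hot: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def put(self, block_id: str, data: bytes) -> None:
        # Populate the hot tier at write time so later reads avoid object storage.
        self._hot[block_id] = data

    def get(self, block_id: str) -> bytes:
        if block_id in self._hot:
            self.hits += 1
            return self._hot[block_id]
        # Cache miss: pay the object storage latency once, then keep the block hot.
        self.misses += 1
        data = self._fetch(block_id)
        self._hot[block_id] = data
        return data

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# A plain dict stands in for the object store in this sketch.
object_store = {"block-1": b"alpha", "block-2": b"beta"}
cache = ReadThroughCache(object_store.__getitem__)

cache.put("block-2", object_store["block-2"])  # written through at ingest time
first = cache.get("block-1")    # miss: fetched from the object store
second = cache.get("block-1")   # hit: served from the hot tier
third = cache.get("block-2")    # hit: populated on write
```

In this toy run, two of the three reads are served from the hot tier. A production hit rate depends on how much of the working set is kept hot.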

Rockset’s caching strategy achieves a 99.9997% cache hit rate, so almost no reads fall through to S3. Over the past year, Rockset has embarked on a series of initiatives aimed at enhancing the cost-efficiency of its advanced caching system. We focused efforts on accommodating the scaling needs of users, ranging from tens to hundreds of terabytes of storage, without compromising on the crucial aspect of low-latency performance.

Rockset’s novel architecture has compute-compute separation, allowing independent scaling of ingest compute from query compute. Rockset provides sub-second latency for data inserts, updates, and deletes. Storage costs, performance and availability are unaffected by ingestion compute or query compute. This unique architecture allows users to:

- Isolate streaming ingest and query compute, eliminating CPU contention.

- Run multiple apps on shared real-time data. No replicas required.

- Scale concurrency quickly. Scale out in seconds and avoid overprovisioning compute.

The combination of storage-compute and compute-compute separation resulted in users bringing onboard new workloads at larger scale, which unsurprisingly added to their data footprint. The larger data footprints challenged us to rethink the hot storage tier for cost effectiveness. Before highlighting the optimizations made, we first want to explain the rationale for building a hot storage tier.

Why Use a Hot Storage Tier?

Rockset is unique in its choice to maintain a hot storage tier. Databases like Elasticsearch rely on locally-attached storage and data warehouses like ClickHouse Cloud use object storage to serve queries that do not fit into memory.

When it comes to serving applications, multiple queries run on large-scale data in a short window of time, typically under a second. The working set can quickly exceed available memory, causing cache misses and data fetches from either locally-attached storage or object storage.

Locally-Attached Storage Limitations

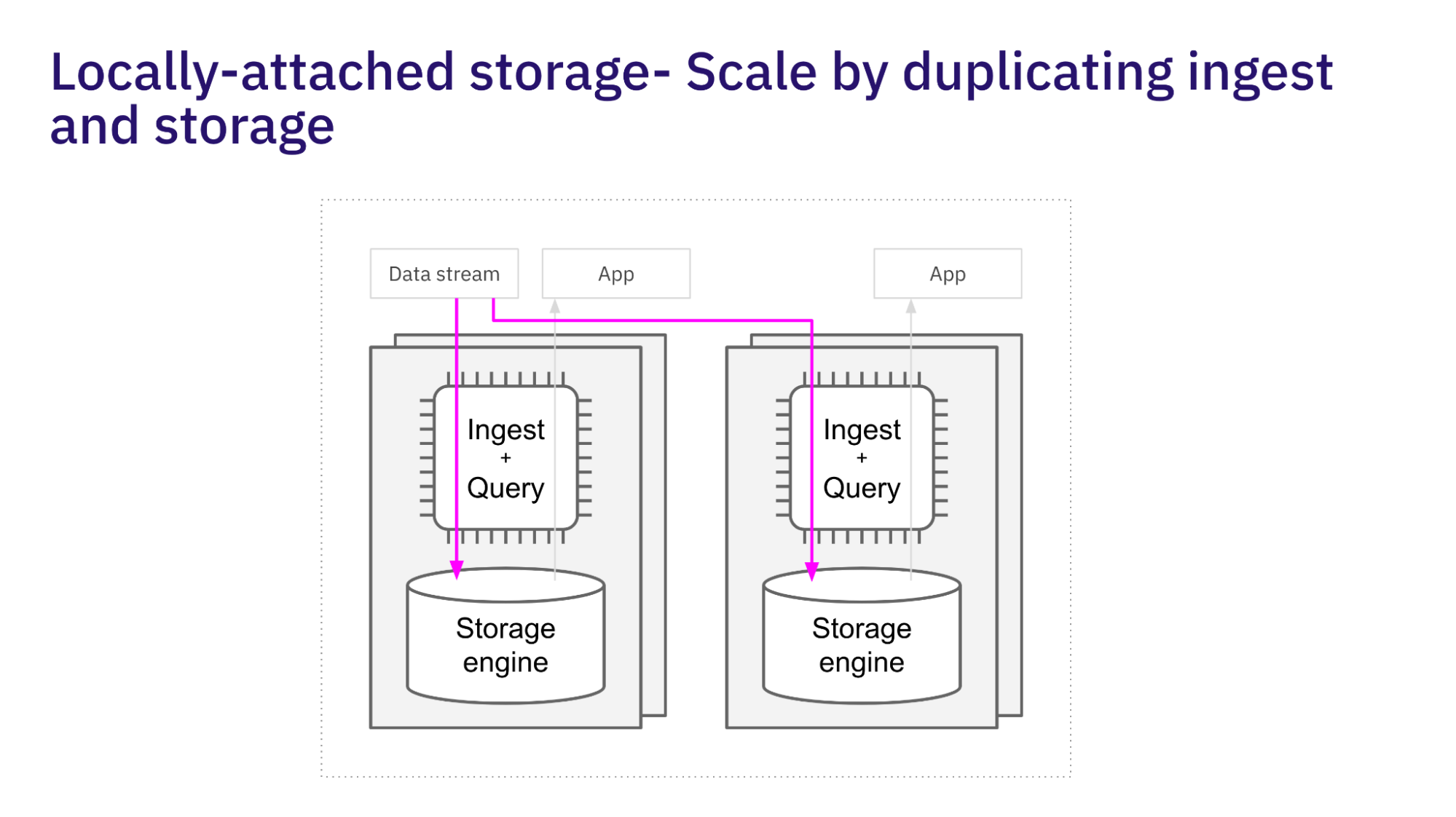

Tightly coupled systems use locally-attached storage for real-time data access and fast response times. Challenges with locally-attached storage include:

- Cannot scale data and queries independently. If the storage size outpaces compute requirements, these systems end up overprovisioned for compute.

- Scaling is slow and error prone. Scaling the cluster requires copying and moving data, which is a slow process.

- High availability is maintained using replicas, which lowers disk utilization and increases storage costs.

- Every replica needs to process incoming data. This results in write amplification and duplication of ingestion work.

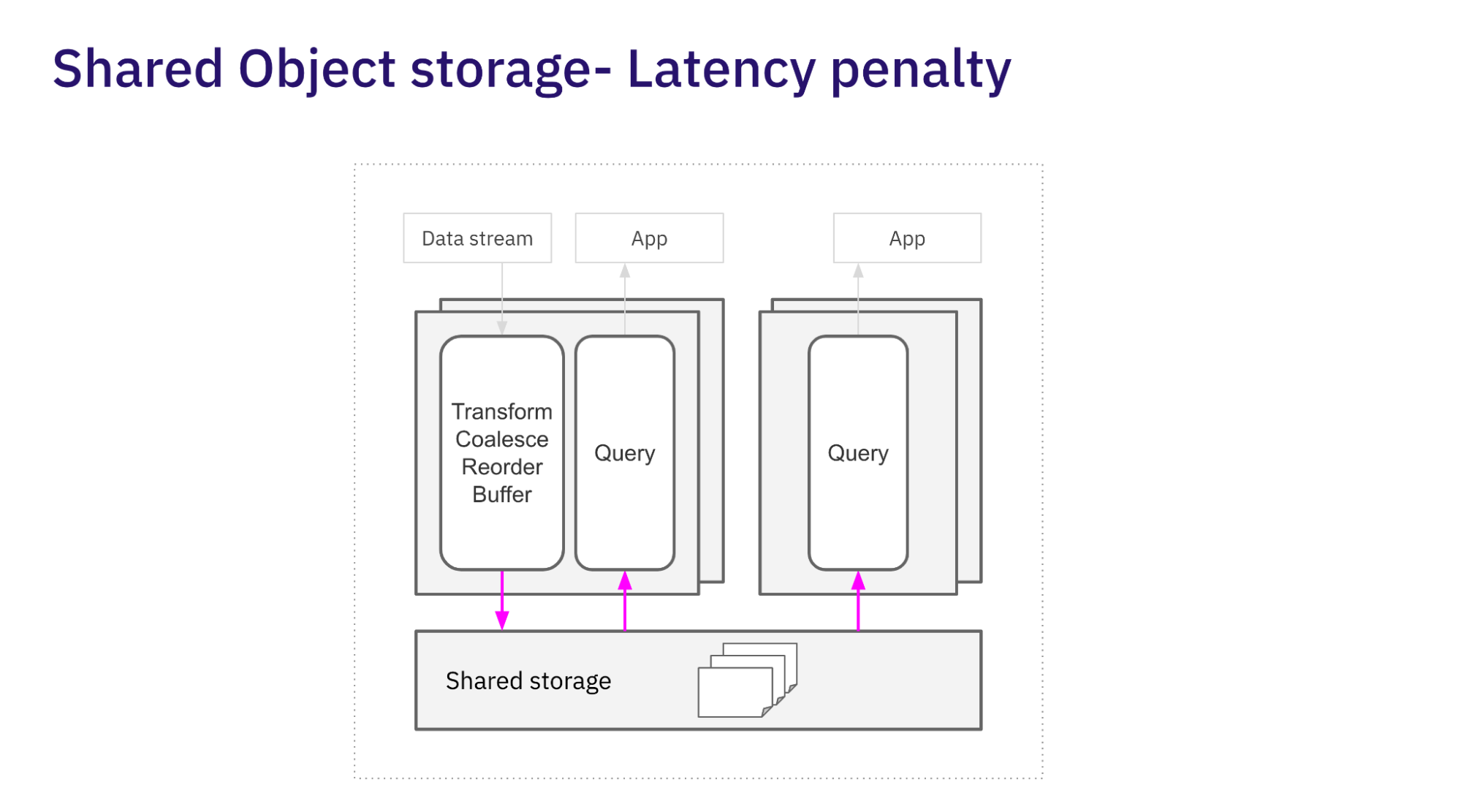

Shared Object Storage Limitations

Creating a disaggregated architecture using cloud object storage removes the contention issues with locally-attached storage. However, it introduces new challenges:

- Added latency, especially for random reads and writes. Internal benchmarking comparing Rockset to S3 saw <1 ms reads from Rockset and ~100 ms reads from S3.

- Overprovisioning memory to avoid reads from object storage for latency-sensitive applications.

- High data latency, usually on the order of minutes. Data warehouses buffer ingest and compress data to optimize for scan operations, which adds time between when data is ingested and when it is queryable.

Amazon has also noted the latency of its cloud object store and recently introduced S3 Express One Zone with single-digit millisecond data access. There are several differences to call out between the design and pricing of S3 Express One Zone and Rockset’s hot storage tier. For one, S3 Express One Zone is intended to be used as a cache in a single availability zone. Rockset is designed to use hot storage for fast access and S3 for durability. We also have different pricing: S3 Express One Zone prices include a per-GB cost as well as costs for PUT, COPY, POST and LIST requests. Rockset’s pricing is only per-GB based.

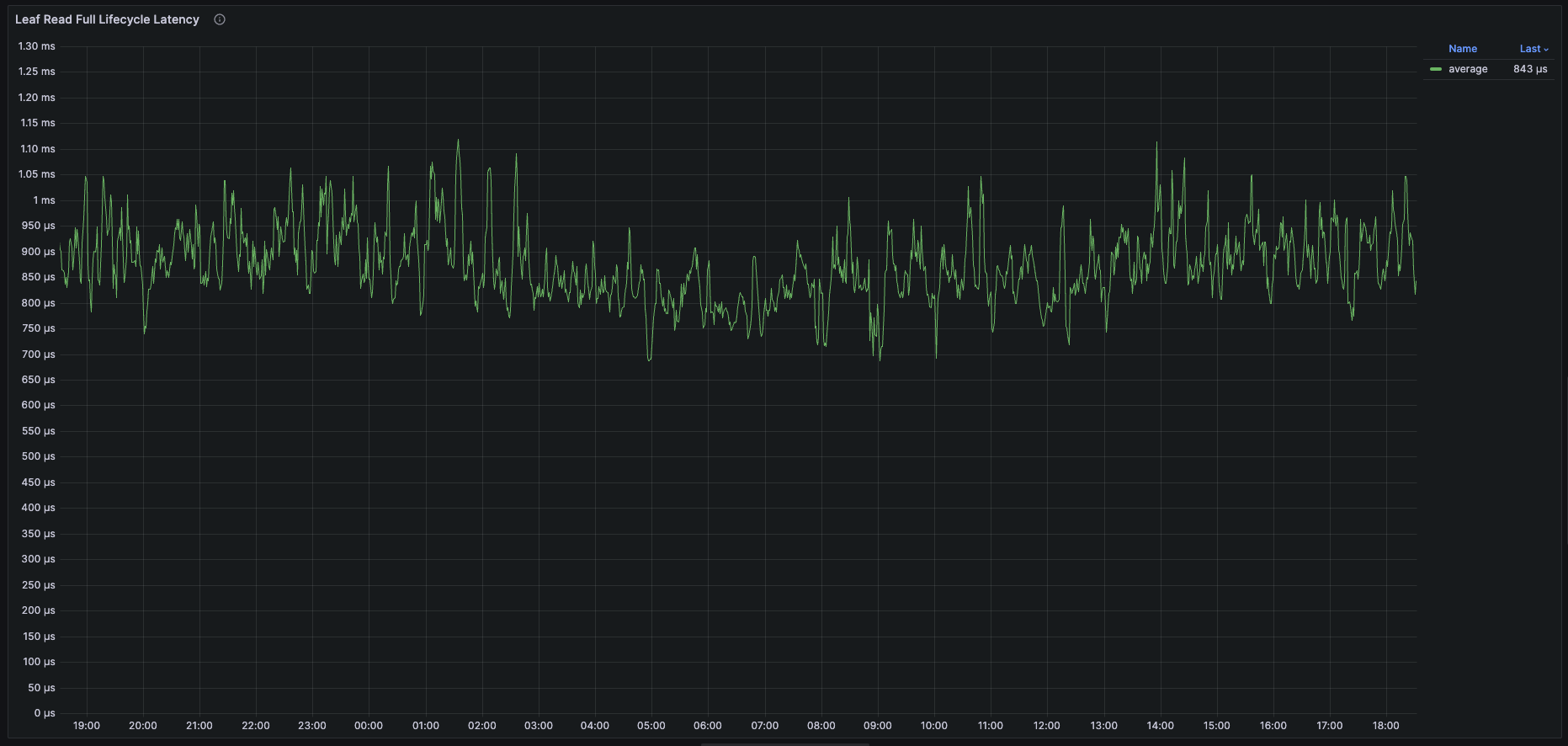

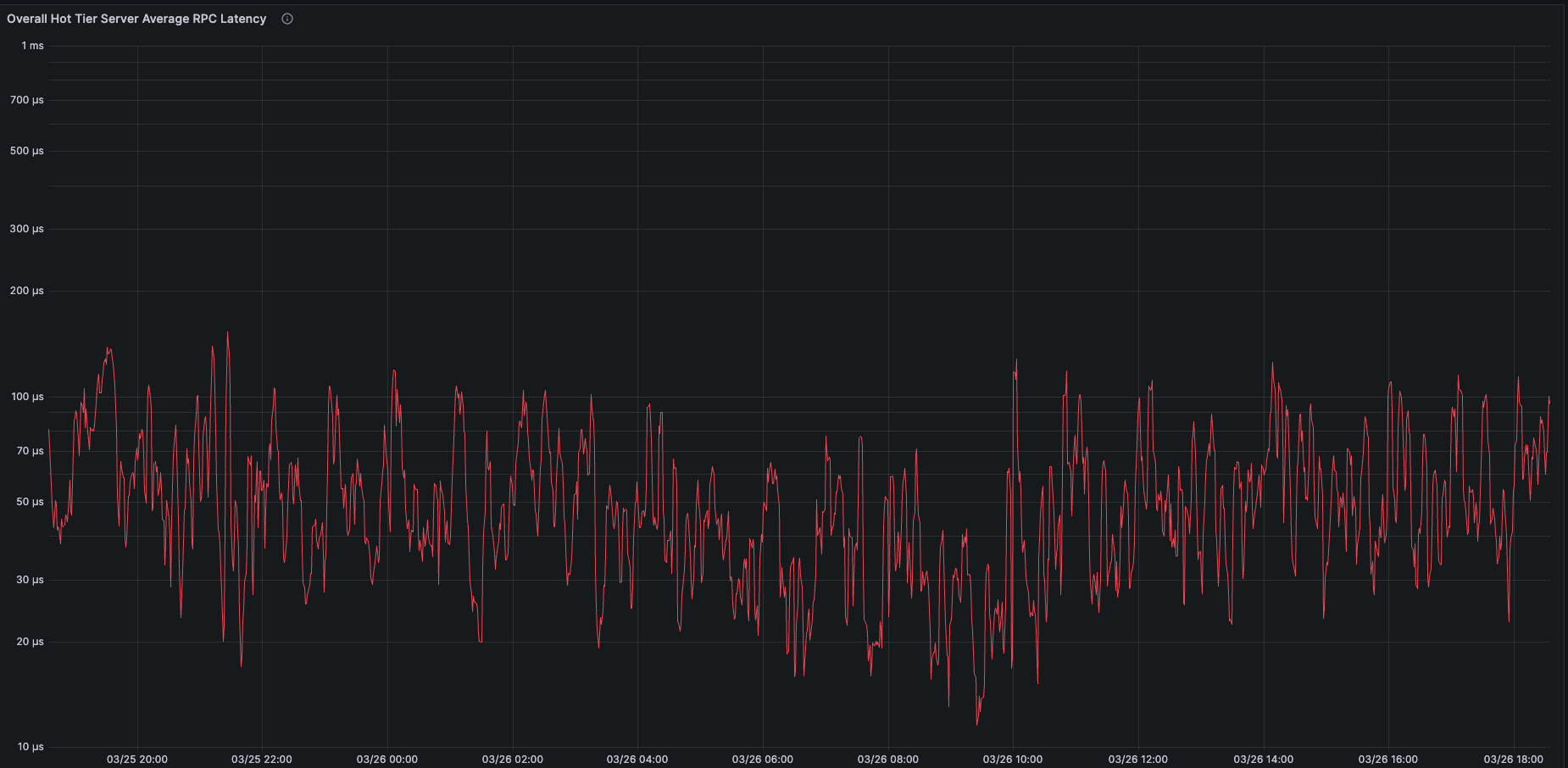

The biggest difference between S3 Express One Zone and Rockset is the performance. Looking at the graph of end-to-end latency from a 24-hour period, we see that Rockset’s mean latency between the compute node and hot storage consistently stays at 1 millisecond or below.

If we examine just server-side latency, the average read is ~100 microseconds or less.

Reducing the Cost of the Hot Storage Tier

To support tens to hundreds of terabytes cost-effectively in Rockset, we leverage new hardware profiles, maximize the use of available storage, implement better orchestration techniques and use snapshots for data recovery.

Leverage Cost-Efficient Hardware

As Rockset separates hot storage from compute, it can choose hardware profiles that are ideally suited for hot storage. Using the latest network and storage-optimized cloud instances, which provide the best price-performance per GB, we have been able to decrease costs by 17% and pass these savings on to customers.

Because we observed that hot storage performance on Rockset is usually bound by IOPS and network bandwidth, we looked for an EC2 instance type with slightly lower RAM and CPU resources but the same amount of network bandwidth and IOPS. Based on production workloads and internal benchmarking, we were able to see similar performance using the new lower-cost hardware and pass on savings to users.

Maximize available storage

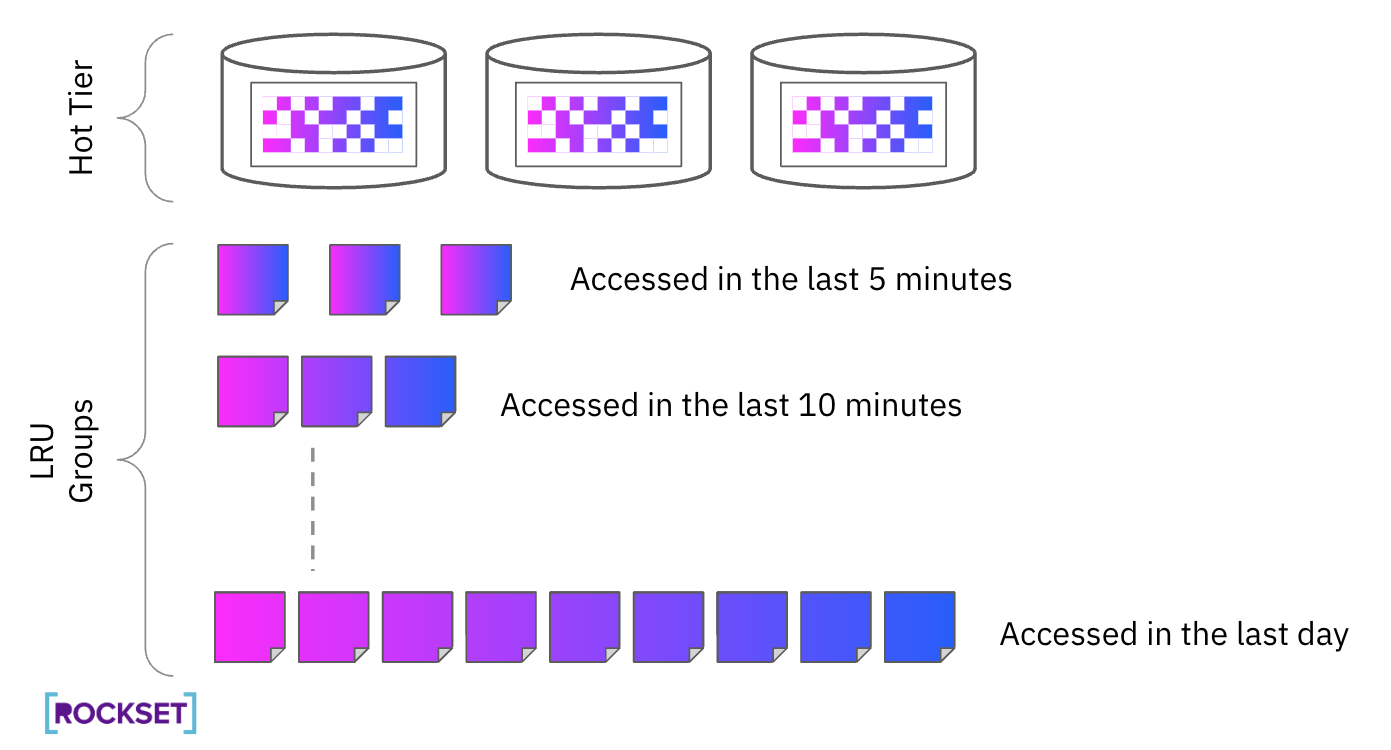

To maintain the highest performance standards, we initially designed the hot storage tier to contain two copies of each data block. This ensures that users get reliable, consistent performance at all times. When we realized two copies had too high an impact on storage costs, we challenged ourselves to rethink how to maintain performance guarantees while storing a partial second copy.

We use an LRU (Least Recently Used) policy to decide which blocks keep a second copy, so that the data needed for querying is readily available even if one of the copies is lost. From production testing we found that storing secondary copies for ~30% of the data is sufficient to avoid going to S3 to retrieve data, even in the case of a storage node crash.
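The sketch below illustrates the general technique of bounding secondary copies with an LRU policy. It is an assumption-laden toy, not Rockset's code: the `SecondaryCopyLRU` class, its methods, and the block names are invented for the example, and the capacity of 3 out of 10 blocks simply mirrors the ~30% figure above.

```python
from collections import OrderedDict
from typing import Optional


class SecondaryCopyLRU:
    """Hypothetical store that keeps second copies only for the most
    recently used blocks, up to a fixed capacity."""

    def __init__(self, capacity_blocks: int) -> None:
        if capacity_blocks <= 0:
            raise ValueError("capacity_blocks must be positive")
        self.capacity = capacity_blocks
        self._blocks: "OrderedDict[str, bytes]" = OrderedDict()

    def __len__(self) -> int:
        return len(self._blocks)

    def record_access(self, block_id: str, data: bytes) -> None:
        # Mark the block as most recently used and keep (or refresh) its copy.
        self._blocks[block_id] = data
        self._blocks.move_to_end(block_id)
        # Evict least recently used copies once over capacity.
        while len(self._blocks) > self.capacity:
            self._blocks.popitem(last=False)

    def recover(self, block_id: str) -> Optional[bytes]:
        # Returns the second copy if one is held, else None
        # (in which case the block would have to come from the backing store).
        return self._blocks.get(block_id)


# Ten primary blocks, with room for second copies of three of them.
primary = {f"b{i}": f"data-{i}".encode() for i in range(10)}
secondary = SecondaryCopyLRU(capacity_blocks=3)

for block_id, data in primary.items():   # queries touch b0 .. b9 in order
    secondary.record_access(block_id, data)

hot_copy = secondary.recover("b9")    # recently used, so a second copy exists
cold_copy = secondary.recover("b0")   # evicted long ago, so no second copy
```

The design choice is that recently queried blocks are the ones most likely to be queried again, so they are the ones worth the extra storage.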

Implement Better Orchestration Techniques

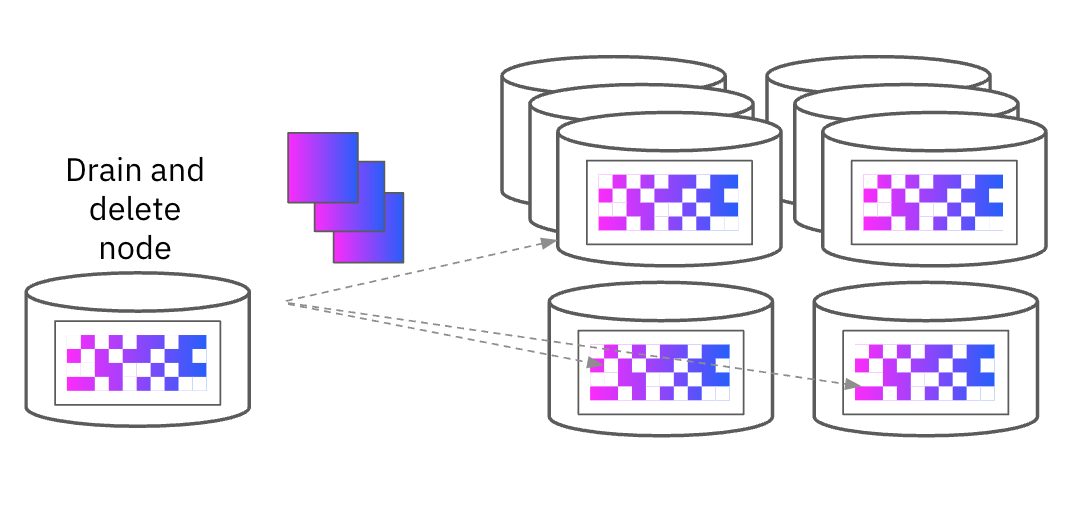

While adding nodes to the hot storage tier is straightforward, removing nodes to optimize for costs requires additional orchestration. If we removed a node and relied on the S3 backup to restore data to the hot tier, users could experience added query latency. Instead, we designed a “pre-draining” state where the node designated for deletion sends its data to the other storage nodes in the cluster. Once all the data is copied to the other nodes, we can safely remove the node from the cluster and avoid any performance impact. We use this same process for any upgrades to ensure consistent cache performance.
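The pre-draining flow can be sketched as a small state machine. Everything here is hypothetical and only meant to make the ordering concrete: the `NodeState` values, the `StorageNode` class, the `drain_and_remove` function, and the round-robin placement of blocks are assumptions, not a description of Rockset's orchestration.

```python
from enum import Enum
from typing import Dict, List


class NodeState(Enum):
    ACTIVE = "active"
    PRE_DRAINING = "pre_draining"
    REMOVED = "removed"


class StorageNode:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = NodeState.ACTIVE
        self.blocks: Dict[str, bytes] = {}


def drain_and_remove(node: StorageNode, cluster: List[StorageNode]) -> None:
    """Copy every block off `node` before it leaves the cluster."""
    targets = [n for n in cluster if n is not node and n.state is NodeState.ACTIVE]
    if not targets:
        raise RuntimeError("no active node available to receive data")

    # Step 1: mark the node so that it is known to be on its way out.
    node.state = NodeState.PRE_DRAINING

    # Step 2: hand each block to another node (round-robin is an assumption).
    for i, (block_id, data) in enumerate(sorted(node.blocks.items())):
        targets[i % len(targets)].blocks[block_id] = data

    # Step 3: only now, with every block rehomed in the hot tier, remove the node.
    node.blocks.clear()
    node.state = NodeState.REMOVED
    cluster.remove(node)


a, b, c = StorageNode("a"), StorageNode("b"), StorageNode("c")
a.blocks = {"x": b"1", "y": b"2", "z": b"3"}
cluster = [a, b, c]

drain_and_remove(a, cluster)
remaining_blocks = {**b.blocks, **c.blocks}
```

After the call, the three blocks live on the remaining nodes, so no read has to fall back to object storage because of the removal.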

Use Snapshots for Data Recovery

Initially, S3 was configured to archive every update, insertion and deletion of documents in the system for recovery purposes. However, as Rockset’s usage expanded, this approach led to storage bloat in S3. To address this, we implemented a strategy involving the use of snapshots, which reduced the volume of data stored in S3. Snapshots allow Rockset to create a low-cost frozen copy of data that can be restored from later. Snapshots do not duplicate the entire dataset; instead, they only record the changes since the previous snapshot. This reduced the storage required for data recovery by 40%.
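To make the incremental idea concrete, here is a minimal sketch in which each snapshot stores only the keys that changed since the previous one, and a restore replays the chain in order. The `take_snapshot` and `restore` functions and the use of `None` as a deletion marker are assumptions for illustration. Rockset's actual snapshot format is not described in this post.

```python
from typing import Dict, List, Optional

# A delta maps a key to its new value, or to None if the key was deleted.
Delta = Dict[str, Optional[bytes]]


def take_snapshot(previous: Dict[str, bytes], current: Dict[str, bytes]) -> Delta:
    """Record only what changed between the previous state and the current one."""
    delta: Delta = {}
    for key, value in current.items():
        if previous.get(key) != value:   # new or updated key
            delta[key] = value
    for key in previous:
        if key not in current:           # deleted key
            delta[key] = None
    return delta


def restore(snapshots: List[Delta]) -> Dict[str, bytes]:
    """Rebuild the latest state by replaying deltas from oldest to newest."""
    state: Dict[str, bytes] = {}
    for delta in snapshots:
        for key, value in delta.items():
            if value is None:
                state.pop(key, None)
            else:
                state[key] = value
    return state


v0: Dict[str, bytes] = {}
v1 = {"a": b"1", "b": b"2"}
v2 = {"a": b"1", "b": b"3"}   # "b" updated
v3 = {"b": b"3", "c": b"4"}   # "a" deleted, "c" inserted

chain = [take_snapshot(v0, v1), take_snapshot(v1, v2), take_snapshot(v2, v3)]
restored = restore(chain)
```

In this example the second snapshot holds a single entry for `"b"` rather than a full copy of the dataset, which is where the storage savings come from.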

Hot storage at 100s of TBs scale

The hot storage layer at Rockset was designed to offer predictable query performance for in-application search and analytics. It creates a shared storage layer that any compute instance can access.

With the new hot storage pricing as low as $0.13 / GB-month, Rockset is able to support workloads in the 10s to 100s of terabytes cost effectively. We’re continuously looking to make hot storage more affordable and pass along cost savings to customers. So far, we have optimized Rockset’s hot storage tier to improve efficiency by more than 200%.

You can learn more about the Rockset storage architecture using RocksDB on the engineering blog and also see storage pricing for your workload in the pricing calculator.