As a search and analytics database, Rockset powers many personalization, anomaly detection, AI, and vector applications that need fast queries on real-time data. Rockset maintains inverted indexes for data, enabling it to efficiently run search queries without scanning over all of the data. We also maintain column stores that allow efficient analytic queries. Read up on converged indexing to learn more about indexing in Rockset.

Inverted indexes are the fastest way to find the rows matching a selective query, but after the rows are identified Rockset, needs to fetch the correlated values from other columns. This can be a bottleneck. In this blog post we will talk about how we made this step much faster, yielding a 4x speedup for customers’ search-like queries.

Fast Search Performance for Modern Applications

For many real-time applications, the ability to execute search queries with millisecond latency at high queries per second (QPS) is essential. For example, check out how Whatnot uses Rockset as their backend for real-time personalization.

This blog presents how we improved the performance of search query CPU utilization and latency by analyzing search-related workloads and query patterns. We take advantage of the fact that, for search-related workloads, the working set usually fits in memory, and focus on improving the in-memory query performance.

Analyzing Search Query Performance in Rockset

Assume we are building the backend for real-time product recommendations. To achieve this, we need to retrieve a list of products, given a city, that can be displayed on the website in decreasing order of their probability of being clicked. To achieve this, we can execute the following example query:

SELECT product_id, SUM(CAST(clicks as FLOAT)) / (SUM(CAST(impressions as FLOAT) + 1.0)) AS click_through_rate

FROM product_clicks p

WHERE city = 'UNITED ST2'

GROUP BY product_id

ORDER BY click_through_rate DESC

Certain cities are of particular interest. Assuming that the data for frequently accessed cities fits in memory, all of the indexing data is stored in RocksDB block cache, the built in cache provided by RocksDB. RocksDB is our data store for all of the indexes.

The product_clicks view contains 600 million documents. When the city filter is applied, around 2 million documents are emitted, which represents approximately 0.3 percent of the total number of documents. There are two possible execution plans for the query.

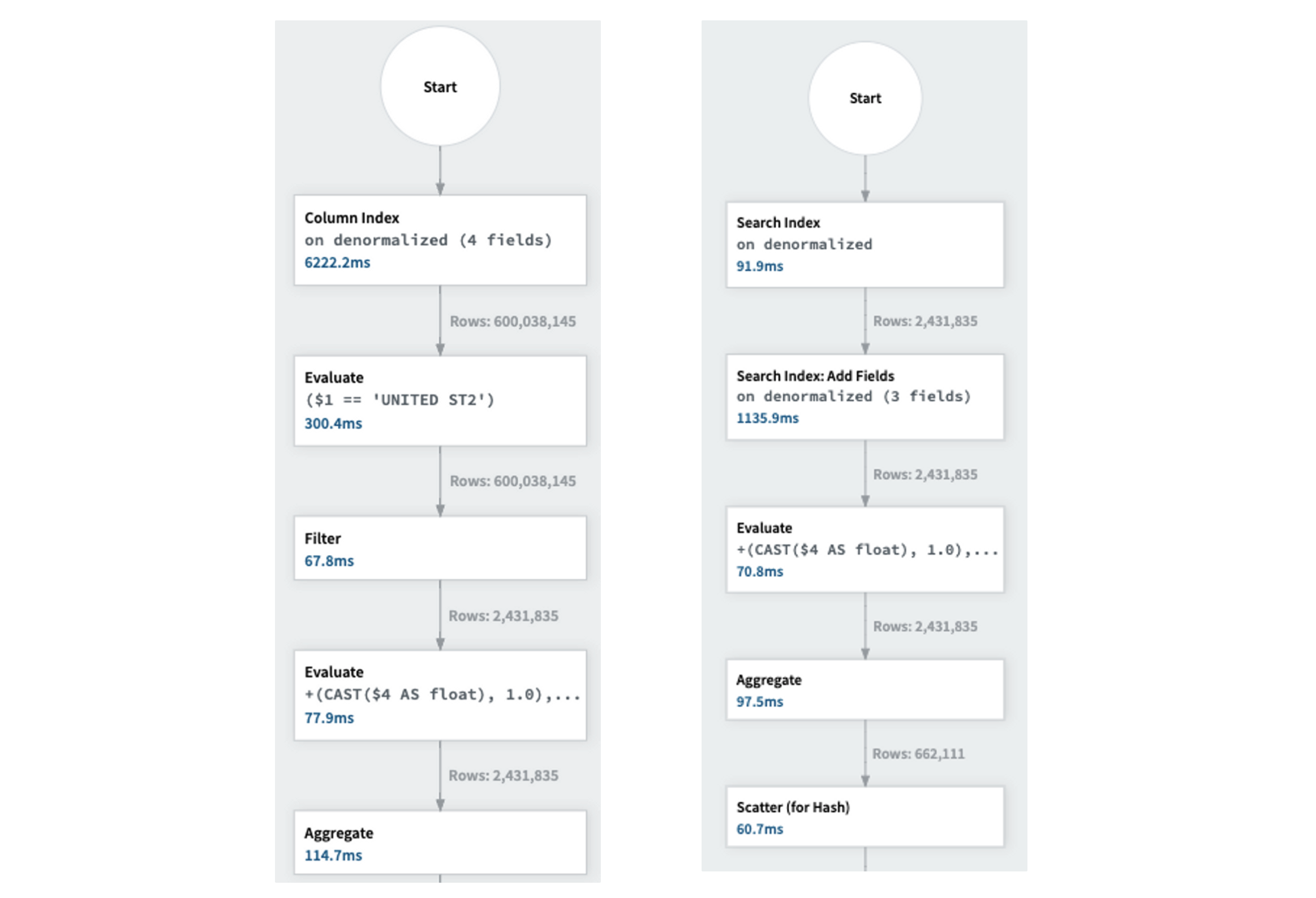

- The cost-based optimizer (CBO) has the option to use the column store to read the necessary columns and filter out unneeded rows. The execution graph on the left of Figure 1 shows that reading the required columns from the column store takes 5 seconds due to the large collection size of 600 million documents.

Figure 1: Query execution using column store on the left. Query execution using inverted/search index on the right.

- To avoid scanning the entire column, the CBO utilizes the inverted index. This enables the retrieval of only the required 2M documents, followed by fetching the required column values for those documents. The execution graph is on the right of Figure 1.

The execution plan when using the inverted index is more efficient than that when using the column store. The Cost-Based Optimizer (CBO) is sophisticated enough to select the appropriate execution plan automatically.

What Is Taking Time?

Let’s examine the bottlenecks in the inverted index execution plan shown in Figure 1 and identify opportunities for optimization. The query executes mainly in three steps:

- Retrieve the document identifiers from the inverted index.

- Obtain the document values using the identifiers from the Row Store. The row store is an index that is part of the converged index, mapping a document identifier to the document value.

- Fetch the required columns from the document values (i.e. product_id, clicks, impressions).

- The combination of steps 2 and 3 is called the

Add Fields operation.

As shown in the execution graph, the Add Fields operation is very CPU-intensive and takes a disproportionate amount of time in query execution. It accounts for 1.1 seconds of the total CPU time of 2 seconds for the query.

Why Is This Taking Time?

Rockset uses RocksDB for all the indexing strategies mentioned above. RocksDB utilizes an in-memory cache, called the block cache, to store the most recently accessed blocks in memory. When the working set fits in memory, the blocks corresponding to the row store are also present in memory. These blocks contain multiple key-value pairs. In the case of the row store, the pairs take the form of (document identifier, document value). The Add Fields operation, is responsible for retrieving document values given a set of document identifiers.

Retrieving a document value from the block cache based on its document identifier is a CPU-intensive process. This is because it involves several steps, primarily determining which block to look for. This is accomplished through a binary search on a RocksDB internal index or by performing multiple lookups with a multi-level RocksDB internal index.

We observed that there is room for optimization by introducing a complementary in-memory cache – a hash table that directly maps document identifiers to document values. We call this complementary cache the RowStoreCache.

RowStoreCache: Complementing the RocksDB Block Cache

The RowStoreCache is a Rockset-internal complementary cache to the RocksDB block cache for the row store.

- The RowStoreCache is an in-memory cache that uses MVCC and acts as a layer above the RocksDB block cache.

- The RowStoreCache stores the document value for a document identifier the first time the document is accessed.

- The cache entry is marked for deletion when the corresponding document receives an update. However, the entry is only deleted when all earlier queries referencing it have finished executing. To determine when the cache entry can be removed, we use the sequence number construct provided by RocksDB.

- The sequence number is a monotonically increasing value that increments on any update to the database. Each query reads the data at a specified sequence number, which we refer to as a snapshot of the database. We maintain an in-memory data structure of all the snapshots currently in use. When we determine that a snapshot is no longer in use because all the queries referencing it have been completed, we know that the corresponding cache entries at the snapshot can be freed.

- We enforce an LRU policy on the RowStoreCache and use time-based policies to determine when a cache entry should be moved on access within the LRU list or removed from it.

Design and implementation.

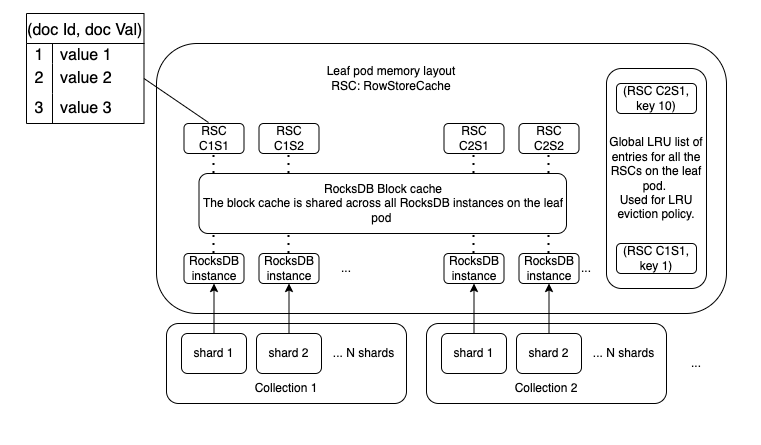

Figure 2 shows the memory layout of the leaf pod, which is the primary execution unit for distributed query execution at Rockset.

Figure 2: Leaf pod memory layout with the RocksDB block cache and the RowStore caches. (\RSC C1S1: RowStoreCache for collection 1 shard 1.)*

In Rockset, each collection is divided into N shards. Each shard is associated with a RocksDB instance responsible for all the documents and corresponding converged indexes within that shard.

We implemented the RowStoreCache to have a one-to-one correspondence with each shard and a global LRU list to enforce LRU policies on the leaf pod.

Each entry in the RowStoreCache contains the document identifier, the document value, the RocksDB sequence number at which the value was read, the latest RocksDB sequence number at which the entry has seen an update, and a mutex to guard access to the entry by multiple threads concurrently. To support concurrent operations on the cache, we use folly::ConcurrentHashMapSIMD.

Operations on the RowStoreCache

-

RowStoreCache::Get(RowStoreCache, documentIdentifier, rocksDBSequenceNumber)

This operation is straightforward. We check if the documentIdentifier is present in the RowStoreCache.

- If it is present and the document has not received any updates between the sequence number it was read and the current sequence number it is queried at, we return the corresponding value. The entry is also moved to the top of the global LRU list of entries so that the entry is evicted last.

- If it is not present, we fetch the value corresponding to the document identifier from the RocksDB instance and set it in the RowStoreCache.

-

RowStoreCache::Set(RowStoreCache, documentIdentifier, documentValue, rocksDBSequenceNumber)

- If the get operation did not find the documentIdentifier in the cache, we try to set the value in the cache. Since multiple threads can try to insert the value corresponding to a documentIdentifier concurrently, we need to ensure that we only insert the value once.

- If the value is already present in the cache, we set the new value only if the entry is not marked to be deleted and the entry corresponds to a later sequence number than the one already present in the cache.

-

EnsureLruLimits

- When an entry is added to the global LRU list of entries and we need to reclaim memory, we identify the least recently accessed entry and its corresponding RowStoreCache.

- We then remove the entry from the corresponding RowStoreCache and unlink it from the global LRU list if the information about updates in the entry to the document is not relevant.

Performance Improvements with the RowStoreCache

Latency Improvements

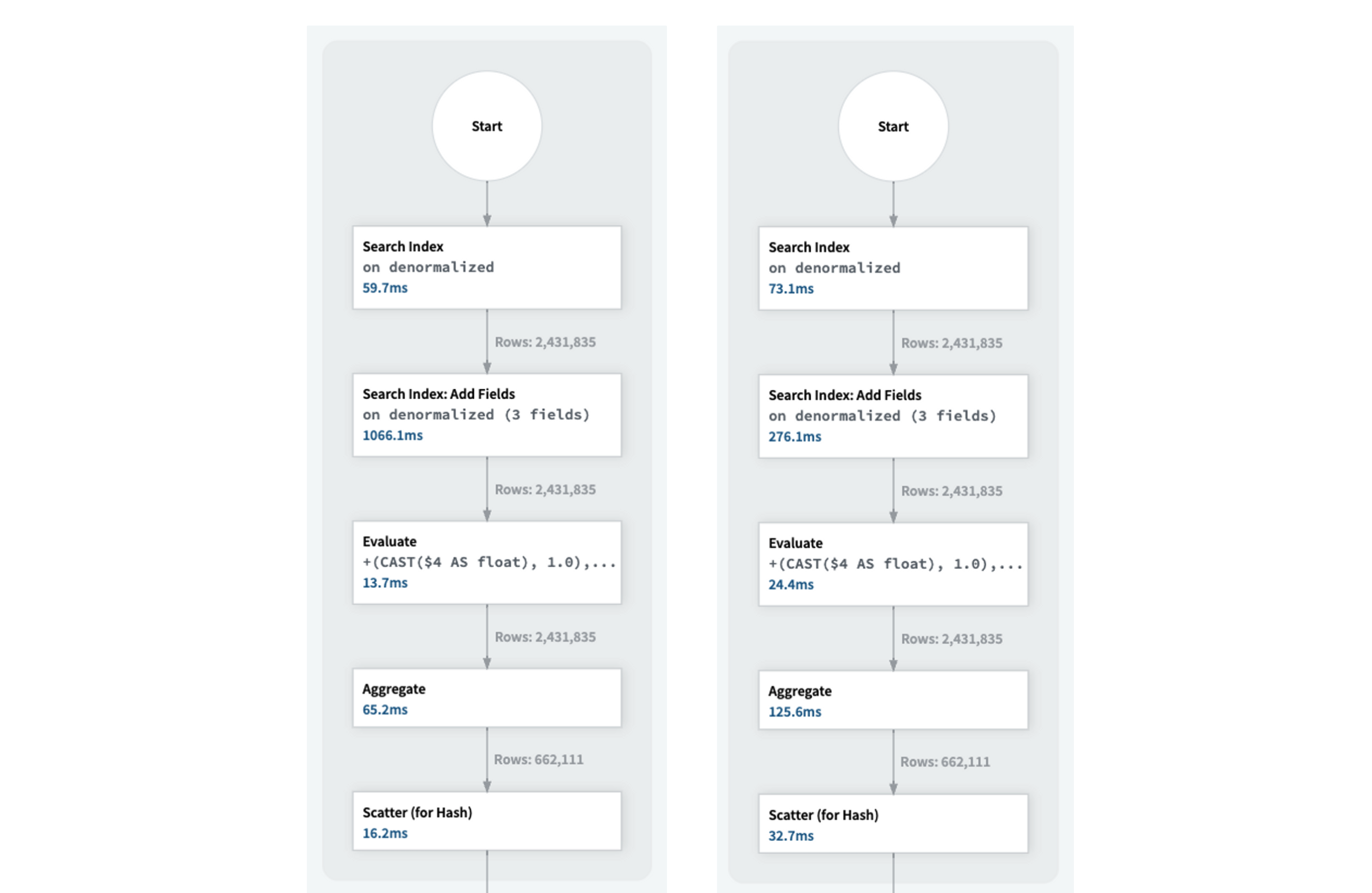

Enabling the RowStoreCache in the example query resulted in a 3x improvement in query latency, reducing it from 2 seconds to 650 milliseconds.

Figure 3: Query execution without the RowStoreCache on the left. Query execution with the RowStoreCache on the right.

Figure 3 shows that the “Add fields” operation took only 276 milliseconds with the RowStoreCache, compared to 1 second without it.

QPS Improvements

Executing the example query with different filters for the city at high QPS showed an improvement in QPS from 2 queries per second to 7 queries per second, in line with the decrease in latency per query.

This represents a 3x improvement in QPS for the example query.

The capacity of the RowStoreCache can be tuned based on the workload for optimal performance.

We have observed similar performance improvements of up to 4x in query latency and QPS for various search queries from multiple customers using the RowStoreCache.

Conclusion

We are constantly striving to improve our caching strategy to achieve the best query performance. The RowStoreCache is a new addition to our caching stack, and results have shown it to be effective at improving search query performance, on both latency and QPS metrics.

Blog authors: Nithin Venkatesh and Nathan Bronson, software engineers at Rockset.